Samsung HBM4 Enters the Vera Rubin Era as Commercial Shipments Hit 11.7 Gbps and Target 13.0 Gbps Headroom

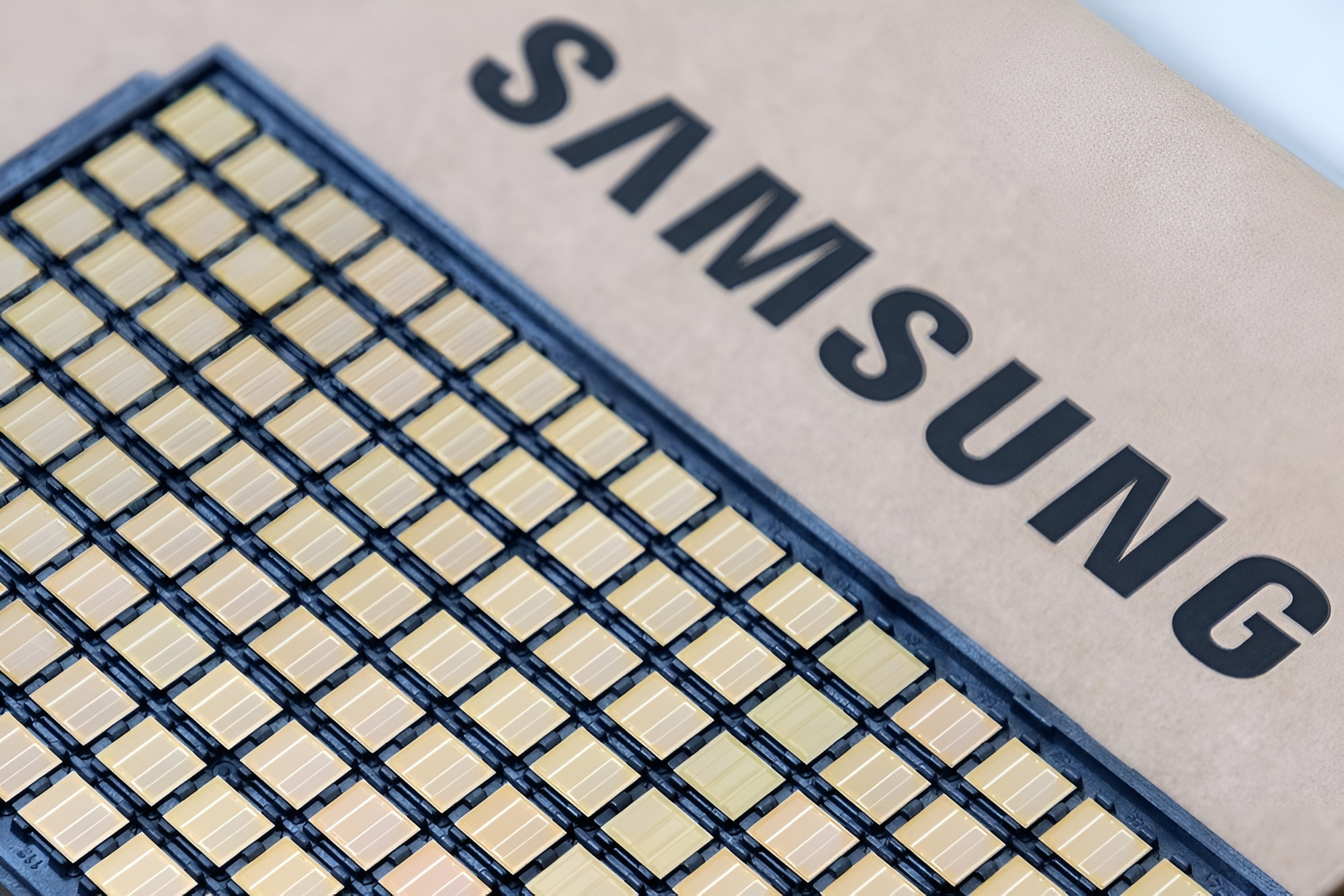

Samsung is putting itself back at the center of the high-bandwidth memory (HBM) race, confirming that its HBM4 is now in mass production and shipping as a commercial product. In its latest announcement, Samsung positions HBM4 as a performance and efficiency step forward for AI computing, built on its sixth-generation 10-nanometer-class DRAM process, known as 1c, paired with a 4-nanometer logic die produced in-house.

From a market perspective, the timing matters. HBM supply has become one of the most strategic chokepoints in modern AI infrastructure, and HBM4 is where the competitive landscape starts to reshuffle across Samsung, SK hynix, and Micron. Samsung’s messaging here is clear: it wants to be seen not as a follower but as a supplier ready for the next wave of accelerator platforms, where bandwidth, capacity, and power efficiency define real-world throughput.

While Samsung does not name specific customers in its post, the industry context makes the connection to NVIDIA’s next platform cycle hard to ignore, especially as NVIDIA’s next-generation roadmap emphasizes faster inference, lower latency, and higher sustained throughput. HBM is one of the decisive variables in that equation, because memory bandwidth and power efficiency increasingly determine how well large models scale under real deployment pressure.

Samsung highlights that its HBM4 delivers a consistent 11.7 Gbps pin speed, which it describes as about 46 percent higher than an 8 Gbps reference point. It also states that speeds can be pushed as high as 13.0 Gbps, framing this as additional headroom to mitigate data bottlenecks as AI workloads scale.
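The percentage claim is easy to check. The short Python sketch below computes the uplift of a given pin speed over the 8 Gbps reference Samsung cites; the function and constant names are illustrative only, not part of any Samsung tooling.

```python
# Pin-speed uplift relative to the 8 Gbps reference point Samsung cites.
REFERENCE_GBPS = 8.0


def uplift_percent(pin_gbps: float, reference_gbps: float = REFERENCE_GBPS) -> float:
    """Return how much faster pin_gbps is than reference_gbps, in percent."""
    return (pin_gbps / reference_gbps - 1.0) * 100.0


# Shipping speed and the stated headroom ceiling.
for speed in (11.7, 13.0):
    print(f"{speed:.1f} Gbps -> +{uplift_percent(speed):.1f}% vs {REFERENCE_GBPS:.0f} Gbps")
```

The 11.7 Gbps figure works out to roughly 46 percent, matching Samsung’s description, while the 13.0 Gbps ceiling would sit about 62.5 percent above the same reference.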

On bandwidth and capacity, Samsung states a maximum of 3.3 TB/s of bandwidth per stack. It is shipping 12-layer HBM4 stacks in 24 GB to 36 GB capacities, while developing 16-layer stacks that would expand capacity to as much as 48 GB per stack. In practical terms, that capacity and bandwidth trajectory aligns with what next-generation accelerators like Vera Rubin will need to keep more compute fed more consistently, especially in inference-heavy environments where every stall has a direct cost impact.
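As a rough cross-check, peak per-stack bandwidth is the number of data pins times the per-pin rate, divided by 8 bits per byte. The sketch below assumes the 2,048-pin HBM4 interface Samsung cites, decimal units (1 TB = 1,000 GB), and raw peak rates with no protocol overhead. Under those assumptions, the 3.3 TB/s maximum lines up with the 13.0 Gbps headroom figure, while the 11.7 Gbps shipping speed works out to about 3.0 TB/s; that mapping is an inference from the arithmetic, not something Samsung spells out. The same sketch divides stack capacity by layer count to get the implied average capacity per DRAM die. All names are hypothetical.

```python
# Peak per-stack bandwidth and per-die capacity implied by Samsung's figures.
# Assumption: 2,048 data I/O pins per HBM4 stack, decimal units, no overhead.
INTERFACE_WIDTH_BITS = 2048


def stack_bandwidth_gb_s(pin_gbps: float, width_bits: int = INTERFACE_WIDTH_BITS) -> float:
    """Peak bandwidth in GB/s: pins x per-pin rate (Gbit/s) / 8 bits per byte."""
    return pin_gbps * width_bits / 8


def gb_per_die(stack_gb: float, layers: int) -> float:
    """Average DRAM capacity per stacked die, in GB."""
    return stack_gb / layers


for speed in (11.7, 13.0):
    tb_s = stack_bandwidth_gb_s(speed) / 1000
    print(f"{speed:.1f} Gbps x {INTERFACE_WIDTH_BITS} pins = {tb_s:.2f} TB/s")

# Shipping 12-layer configurations and the 16-layer stack in development.
for stack_gb, layers in ((24, 12), (36, 12), (48, 16)):
    print(f"{stack_gb} GB / {layers} layers = {gb_per_die(stack_gb, layers):.0f} GB per die")
```

Both the 36 GB 12-layer stack and the planned 48 GB 16-layer stack imply about 3 GB per die, which suggests the capacity gain comes from adding layers rather than from denser dies.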

Samsung also frames HBM4 as a solution to the power and thermal challenges created by doubling the I/O count from 1,024 pins to 2,048 pins. The company says it achieved a 40 percent improvement in power efficiency through low-voltage through-silicon via (TSV) and power delivery network (PDN) optimization, while improving thermal resistance by 10 percent and heat dissipation by 30 percent compared to HBM3E. Whether you are building hyperscale training clusters or inference racks, this is the point where memory stops being only a performance spec. It becomes a total-cost-of-ownership lever.
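Samsung does not define the metric behind its 40 percent figure. One common reading is bits moved per joule, in which case energy per bit falls to 1/1.4 of the baseline (assumed here to be HBM3E). The Python sketch below applies that assumption to show how interface power scales with throughput; the throughput ratios are hypothetical values chosen for illustration, and the function names are not from any real tool.

```python
# Relative interface power under one reading of "40 percent better power efficiency".
# Assumption: efficiency = bits per joule, so energy per bit drops to 1 / 1.4
# of an assumed HBM3E baseline. Samsung does not spell out the metric.
EFFICIENCY_GAIN = 0.40


def relative_power(throughput_ratio: float, efficiency_gain: float = EFFICIENCY_GAIN) -> float:
    """Power vs. baseline = (throughput ratio) x (energy-per-bit ratio)."""
    energy_per_bit_ratio = 1.0 / (1.0 + efficiency_gain)
    return throughput_ratio * energy_per_bit_ratio


# Hypothetical throughput ratios, for illustration only.
for ratio in (1.0, 2.0):
    print(f"{ratio:.0f}x throughput -> {relative_power(ratio):.2f}x power")
```

Under this reading, a 40 percent efficiency gain cuts energy per bit by roughly 29 percent rather than 40 percent, and a stack moving twice the data would still draw about 1.43 times the baseline power. That is why the thermal resistance and heat dissipation figures matter alongside the efficiency claim.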

Samsung also projects aggressive momentum, stating that it expects HBM sales to more than triple in 2026 compared to 2025 and that HBM4E sampling will begin in the second half of 2026. If Samsung can translate its shipment milestone into broad qualification wins, HBM4 becomes more than a product. It becomes Samsung’s re-entry ticket into the highest-margin lane of the memory market.

The bigger takeaway is that Vera Rubin-class platforms will be judged on system-level throughput, not just compute. If Samsung’s HBM4 truly meets the speed, capacity, and efficiency targets it is advertising, this is the kind of supply chain shift that can materially influence how quickly next-generation AI infrastructure scales.

Do you see HBM4 capacity, bandwidth, and efficiency as the biggest limiter for next-generation AI accelerators, or will networking and rack-level power delivery remain the true bottleneck even as memory improves?