NVIDIA Says Blackwell Cuts AI Cost Per Token by Up to 10x Versus Hopper, Spotlighting GB200 NVL72 and MoE Parallelism

NVIDIA is positioning its Blackwell platform as a major inflection point for inference economics, claiming it can reduce cost per token by up to 10x compared with the Hopper generation when paired with optimized inference stacks. In a new company post, NVIDIA frames tokenomics as the core unit-economics problem behind scaling modern AI across healthcare workflows, interactive gaming dialogue, and customer service agents, arguing that infrastructure efficiency is now the fastest lever for making frontier-level intelligence affordable at scale.

The headline claim is anchored on inference providers including Baseten, DeepInfra, Fireworks AI, and Together AI, which NVIDIA says are using Blackwell to drive large cost-per-token reductions across industry workloads. NVIDIA highlights that these providers host advanced open-source models and combine Blackwell's hardware-software co-design with their own optimized inference stacks to push down latency and operating cost.

NVIDIA then breaks the story into concrete case studies that map directly to high-volume inference patterns.

In healthcare, NVIDIA points to Sully.ai running open-source models through Baseten’s deployment stack on Blackwell. NVIDIA states the result was a 90% reduction in inference costs, which it describes as a 10x reduction versus a prior closed-source implementation, alongside a 65% improvement in response time for key workflows.

For gaming-adjacent workloads, NVIDIA highlights Latitude and DeepInfra, describing how MoE model serving benefits from Blackwell’s low-precision path and platform characteristics. NVIDIA specifically notes an example where cost per million tokens dropped from 20 cents on Hopper to 10 cents on Blackwell, then to 5 cents with native NVFP4, a 4x improvement overall while maintaining expected accuracy.
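The arithmetic behind these figures is easy to check. The short Python sketch below is illustrative only: the per-million-token prices are the ones quoted above, while the helper names and the monthly token volume are assumptions made for the example, not anything NVIDIA or DeepInfra published.

```python
def reduction_factor(old_cost: float, new_cost: float) -> float:
    """Return how many times cheaper new_cost is than old_cost."""
    return old_cost / new_cost


def percent_reduction(old_cost: float, new_cost: float) -> float:
    """Return the cost reduction as a percentage of old_cost."""
    return (1 - new_cost / old_cost) * 100


def factor_from_percent(pct: float) -> float:
    """Convert a percentage reduction (e.g. 90) into an 'Nx cheaper' factor."""
    return 1 / (1 - pct / 100)


def monthly_cost_usd(cents_per_million: float, tokens: int) -> float:
    """Dollar cost of serving `tokens` tokens at a given price per million."""
    return cents_per_million * (tokens / 1_000_000) / 100


# Prices quoted in the article, in cents per million tokens.
HOPPER, BLACKWELL, BLACKWELL_NVFP4 = 20.0, 10.0, 5.0

# Hypothetical workload size, chosen only to make the numbers concrete.
ASSUMED_MONTHLY_TOKENS = 1_000_000_000

if __name__ == "__main__":
    for label, price in [("Hopper", HOPPER),
                         ("Blackwell", BLACKWELL),
                         ("Blackwell + NVFP4", BLACKWELL_NVFP4)]:
        factor = reduction_factor(HOPPER, price)
        pct = percent_reduction(HOPPER, price)
        cost = monthly_cost_usd(price, ASSUMED_MONTHLY_TOKENS)
        print(f"{label}: {factor:.0f}x vs Hopper, "
              f"{pct:.0f}% cheaper, ${cost:.2f}/month (assumed volume)")

    # The healthcare case study's 90% reduction corresponds to roughly 10x.
    print(f"90% reduction = {factor_from_percent(90):.1f}x")
```

The same conversion explains why a 90% cost reduction and a 10x reduction describe the same result: paying 10% of the original price is one tenth of the cost.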

For multi-agent and agentic chat workloads, NVIDIA cites Sentient using Fireworks AI, stating that Sentient achieved 25 to 50 percent better cost efficiency compared with its Hopper-based deployment after moving to a Blackwell-optimized inference stack.

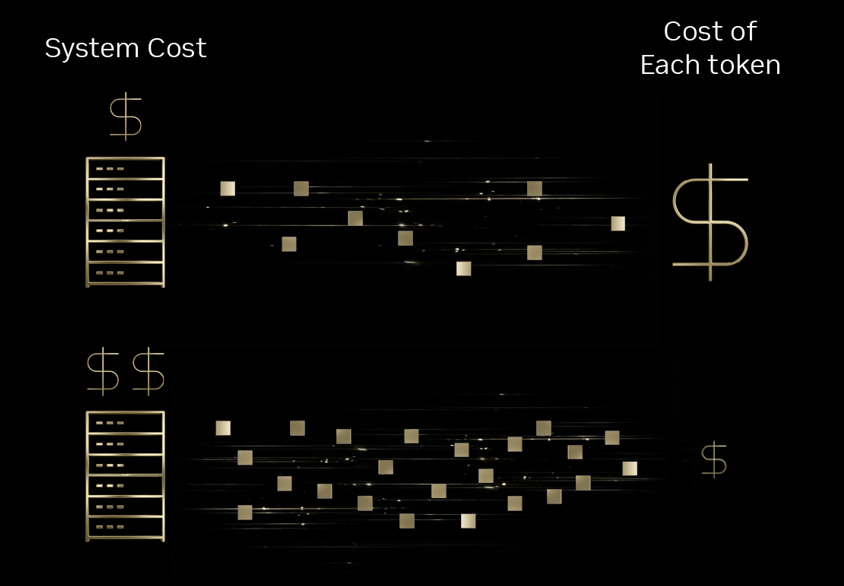

On the platform side, NVIDIA attributes a major share of the tokenomics gain to what it calls extreme co-design across compute, networking, and software, and it explicitly calls out GB200 NVL72 as the system that scales this impact further. NVIDIA describes GB200 NVL72 as delivering a breakthrough 10x reduction in cost per token for reasoning MoE models compared with Hopper, positioning expert parallelism and high-bandwidth communication as the operational backbone that keeps sparse MoE execution efficient at rack scale.

The strategic takeaway is clear. NVIDIA is not just selling faster GPUs; it is selling a business case in which token output scales faster than infrastructure cost, which in turn unlocks new product tiers for anyone building AI features that must respond quickly and run continuously. That is especially relevant for gaming and live-service ecosystems, where every player action can trigger inference, making cost per token a direct driver of whether AI systems remain a premium feature or become a sustainable default.

Do you think a 10x drop in cost per token will make AI-powered gameplay and NPC dialogue mainstream in 2026, or will latency and safety constraints still keep most games on scripted content?