Samsung Begins Mass Production and Shipments of HBM4

Samsung has officially begun mass production and commercial shipments of its next-generation HBM4 memory, positioning itself to capture early leadership as the AI compute market shifts toward higher-bandwidth, higher-capacity stacks. The announcement comes directly from Samsung’s newsroom release, which confirms that HBM4 is now shipping to customers and outlines the performance, efficiency, and thermal improvements designed to support the next wave of datacenter GPUs and AI accelerators.

From a platform strategy perspective, this matters because HBM is no longer a niche memory tier. It is becoming one of the primary constraints on AI scaling, with bandwidth and power efficiency increasingly acting as performance multipliers for modern accelerators. Samsung explicitly frames HBM4 as a solution to the data bottlenecks that intensify as models grow, while also addressing the practical realities that come with more I/O pins, higher speeds, and tighter thermal envelopes.

Samsung states its HBM4 delivers a consistent per-pin data rate of 11.7 gigabits per second (Gbps), exceeding an 8 Gbps baseline by about 46 percent. Samsung also says HBM4 can be pushed as high as 13.0 Gbps, which it positions as a way to ease data bottlenecks as AI workloads scale.
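The percentages above follow from simple ratios against the 8 Gbps baseline. A minimal Python sketch using only the figures quoted in the release (the function name `uplift_percent` is our own illustration):

```python
# Per-pin data rates quoted in Samsung's HBM4 announcement (Gbps).
BASELINE_GBPS = 8.0   # baseline the release compares against
RATED_GBPS = 11.7     # stated consistent processing speed
PEAK_GBPS = 13.0      # stated enhanced ceiling


def uplift_percent(rate_gbps: float, baseline_gbps: float = BASELINE_GBPS) -> float:
    """Return how far rate_gbps exceeds baseline_gbps, in percent."""
    return (rate_gbps / baseline_gbps - 1.0) * 100.0


rated = uplift_percent(RATED_GBPS)
peak = uplift_percent(PEAK_GBPS)
print(f"{RATED_GBPS} Gbps is {rated:.2f}% above baseline")
print(f"{PEAK_GBPS} Gbps is {peak:.2f}% above baseline")
```

The 11.7 Gbps rating works out to roughly 46.25 percent, matching the "about 46 percent" claim, while the 13.0 Gbps ceiling sits 62.5 percent above the same baseline.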

On bandwidth, Samsung says total memory bandwidth per stack rises to a maximum of 3.3 terabytes per second, which it describes as 2.7 times that of HBM3E.
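Samsung does not restate the HBM3E figure it compares against here, but the two numbers it does give imply one. A small sketch, assuming the 2.7x ratio compares peak per-stack bandwidth for both generations (the variable names are illustrative):

```python
HBM4_MAX_TBPS = 3.3   # Samsung's stated maximum per-stack bandwidth
RATIO_VS_HBM3E = 2.7  # Samsung's stated multiple over HBM3E

# Assumption: the ratio compares peak per-stack bandwidth of both generations.
implied_hbm3e_tbps = HBM4_MAX_TBPS / RATIO_VS_HBM3E
print(f"implied HBM3E reference: {implied_hbm3e_tbps:.2f} TB/s per stack")
```

That works out to roughly 1.22 TB/s per stack. Because both inputs are rounded, treat it as an approximate, derived figure rather than one Samsung publishes in this announcement.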

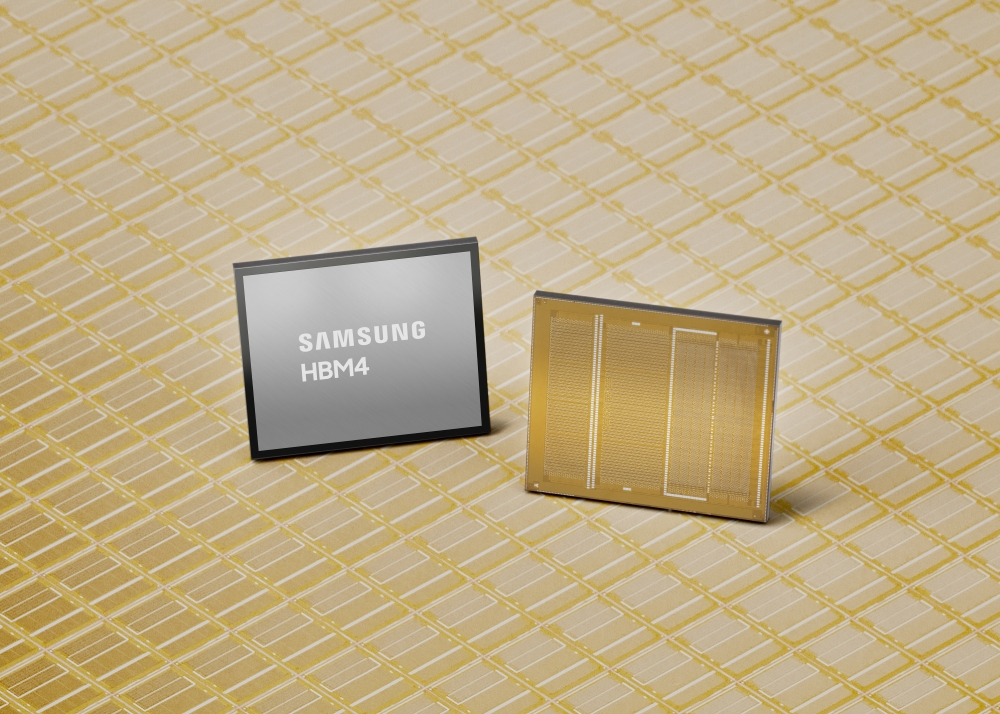

For capacity, Samsung offers 12-layer stacks ranging from 24 gigabytes to 36 gigabytes, with 16-layer stacking providing a path to 48 gigabytes.
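Dividing stack capacity by layer count gives the implied density of each DRAM die. This sketch assumes every layer is an identical DRAM core die, that capacity is split evenly across them, and that the logic base die stores no data; the resulting die densities are inferences from the article's figures, not numbers stated by Samsung:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StackConfig:
    layers: int       # number of stacked DRAM core dies
    capacity_gb: int  # total stack capacity in gigabytes

    def per_die_gb(self) -> float:
        # Assumes capacity is split evenly across identical DRAM dies
        # and the logic base die contributes no storage.
        return self.capacity_gb / self.layers

    def per_die_gbit(self) -> float:
        # 8 gigabits per gigabyte.
        return self.per_die_gb() * 8


# Stack options as described in the announcement.
CONFIGS = [StackConfig(12, 24), StackConfig(12, 36), StackConfig(16, 48)]

for cfg in CONFIGS:
    print(f"{cfg.layers}-high, {cfg.capacity_gb} GB -> {cfg.per_die_gbit():.0f} Gb per die")
```

Under these assumptions, the 36 GB and 48 GB options both imply 24 Gb dies, with the 16-layer stack gaining capacity purely from height, while the 24 GB option implies 16 Gb dies.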

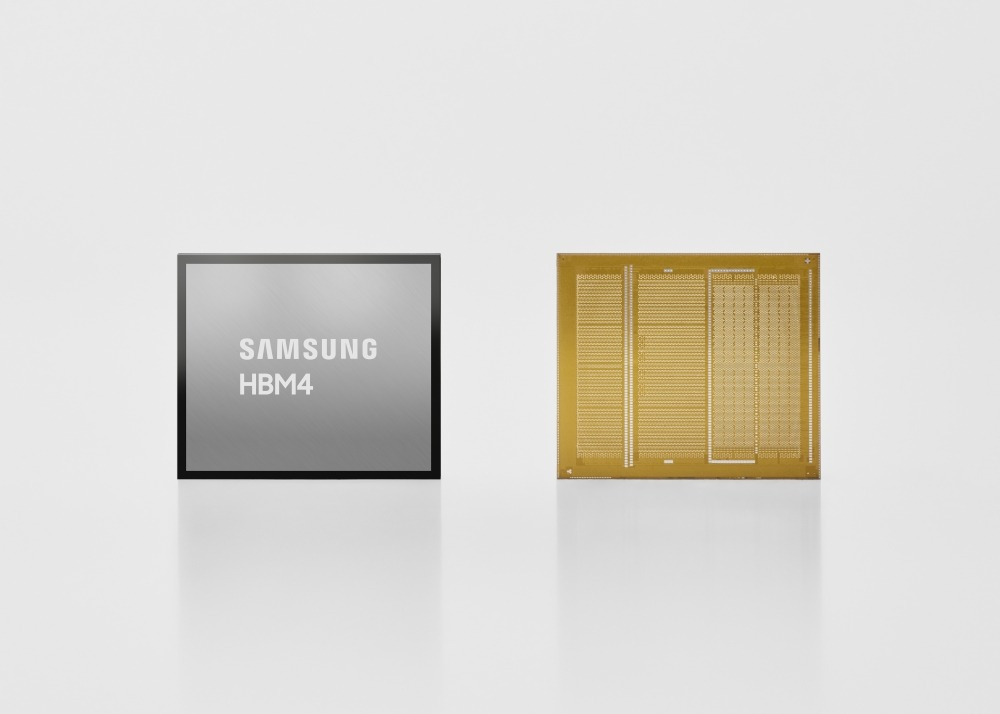

The company also highlights process technology and integration details: a 10-nanometer-class DRAM process labeled 1c, a 4-nanometer logic process for the base die, and an interface that doubles the I/O count from 1,024 to 2,048 pins.
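The doubled interface is what makes the bandwidth figure reachable. Theoretical peak per-stack bandwidth is the I/O pin count times the per-pin data rate, divided by 8 to convert bits to bytes. A minimal sketch, assuming decimal units (1 TB/s = 1,000 GB/s) and ignoring protocol overhead; `stack_bandwidth_gbs` is a hypothetical helper:

```python
def stack_bandwidth_gbs(io_pins: int, gbps_per_pin: float) -> float:
    """Theoretical peak per-stack bandwidth in GB/s (bits converted to bytes)."""
    return io_pins * gbps_per_pin / 8


# (pin count, per-pin rate in Gbps) pairs built from the announced figures.
CASES = [
    (1024, 13.0),  # hypothetical: HBM4 speed on the older 1,024-pin width
    (2048, 11.7),  # 2,048 pins at the stated consistent speed
    (2048, 13.0),  # 2,048 pins at the stated enhanced ceiling
]

for pins, rate in CASES:
    gbs = stack_bandwidth_gbs(pins, rate)
    print(f"{pins} pins @ {rate} Gbps -> {gbs:.1f} GB/s ({gbs / 1000:.2f} TB/s)")
```

At 13.0 Gbps across 2,048 pins the result is 3,328 GB/s, which rounds to the 3.3 TB/s maximum Samsung quotes; at the 11.7 Gbps rating it is closer to 3.0 TB/s. The same speed on a 1,024-pin interface would deliver only half as much.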

Samsung is putting a major spotlight on efficiency, stating HBM4 achieves a 40 percent improvement in power efficiency. The company attributes this to an advanced low-power design in the core die, low-voltage through-silicon via (TSV) technology, and power distribution network (PDN) optimization.
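A 40 percent efficiency gain does not mean 40 percent less energy per bit. If "power efficiency" is read as bits moved per joule (an assumption, since the metric is not defined here), energy per bit falls to 1/1.4 of the baseline, about 29 percent lower, and total power still rises whenever bandwidth grows faster than efficiency. A sketch with hypothetical helper names and a hypothetical 2x bandwidth step:

```python
EFFICIENCY_GAIN = 0.40  # Samsung's stated power-efficiency improvement


def relative_energy_per_bit(gain: float) -> float:
    """Energy per bit vs. the baseline, assuming efficiency = bits per joule."""
    return 1.0 / (1.0 + gain)


def relative_power(bandwidth_ratio: float, gain: float) -> float:
    """Power vs. the baseline when bandwidth scales by bandwidth_ratio."""
    return bandwidth_ratio * relative_energy_per_bit(gain)


energy = relative_energy_per_bit(EFFICIENCY_GAIN)
print(f"energy per bit: {energy:.3f}x baseline ({(1 - energy) * 100:.1f}% lower)")

# Hypothetical: bandwidth doubles while efficiency improves by 40%.
power = relative_power(2.0, EFFICIENCY_GAIN)
print(f"power at 2x bandwidth: {power:.2f}x baseline")
```

Under that reading, doubling bandwidth still leaves the memory drawing roughly 1.43 times the baseline power, which is why thermal behavior matters as much as the efficiency claim.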

On thermal behavior, Samsung claims a 10 percent improvement in thermal resistance and a 30 percent improvement in heat dissipation versus HBM3E, and positions these gains as key to managing the heat and power challenges that come with the jump to 2,048 pins.
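To first order, the steady-state temperature rise across a package is power times thermal resistance (ΔT = P × R_th), so a 10 percent lower thermal resistance means a 10 percent smaller temperature rise at the same power. The sketch below assumes "improvement in thermal resistance" means a 10 percent reduction; the 30 W and 1.0 °C/W inputs are placeholder values chosen for easy arithmetic, not Samsung figures, and the 30 percent heat-dissipation claim is not modeled because its metric is not specified:

```python
def temperature_rise_c(power_w: float, r_th_c_per_w: float) -> float:
    """Steady-state temperature rise in degC for a lumped thermal resistance."""
    return power_w * r_th_c_per_w


# Placeholder inputs for illustration only; not Samsung data.
POWER_W = 30.0
R_TH_BASELINE = 1.0                         # degC per watt
R_TH_IMPROVED = R_TH_BASELINE * (1 - 0.10)  # assumed 10% reduction

dt_baseline = temperature_rise_c(POWER_W, R_TH_BASELINE)
dt_improved = temperature_rise_c(POWER_W, R_TH_IMPROVED)
print(f"temperature rise: {dt_baseline:.1f} C -> {dt_improved:.1f} C")
```

Equivalently, the same headroom could accommodate about 11 percent more power (1 / 0.9 ≈ 1.11) at an unchanged temperature rise.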

For gamers, this is not just datacenter tech theater. The AI accelerator roadmap is a leading indicator of what eventually trickles down to consumer GPUs and next-generation game development pipelines, particularly AI-assisted rendering, simulation, and content creation workflows. When top-end compute becomes more bandwidth-efficient, it creates headroom for heavier real-time systems that can shape future engines, tools, and even how studios build worlds.

Samsung also provides forward guidance on its roadmap. The company expects HBM4E sampling to begin in the second half of 2026, and says custom HBM samples built to customer specifications will start reaching customers in 2027. Samsung additionally anticipates that its HBM sales will more than triple in 2026 compared to 2025, and states it is proactively expanding HBM4 production capacity.

The near-term competitive story is simple: mass production and shipments mark the moment the conversation shifts from specs to execution. Yield stability, packaging throughput, and customer qualification cycles will define who actually wins sockets in the next accelerator generation, and Samsung is signaling it wants to be in that first wave with a commercial product ready now.

Do you think HBM4 bandwidth and 48 GB stacks will be the key differentiator for next generation GPUs, or will power and cooling constraints remain the real bottleneck even with these efficiency gains?