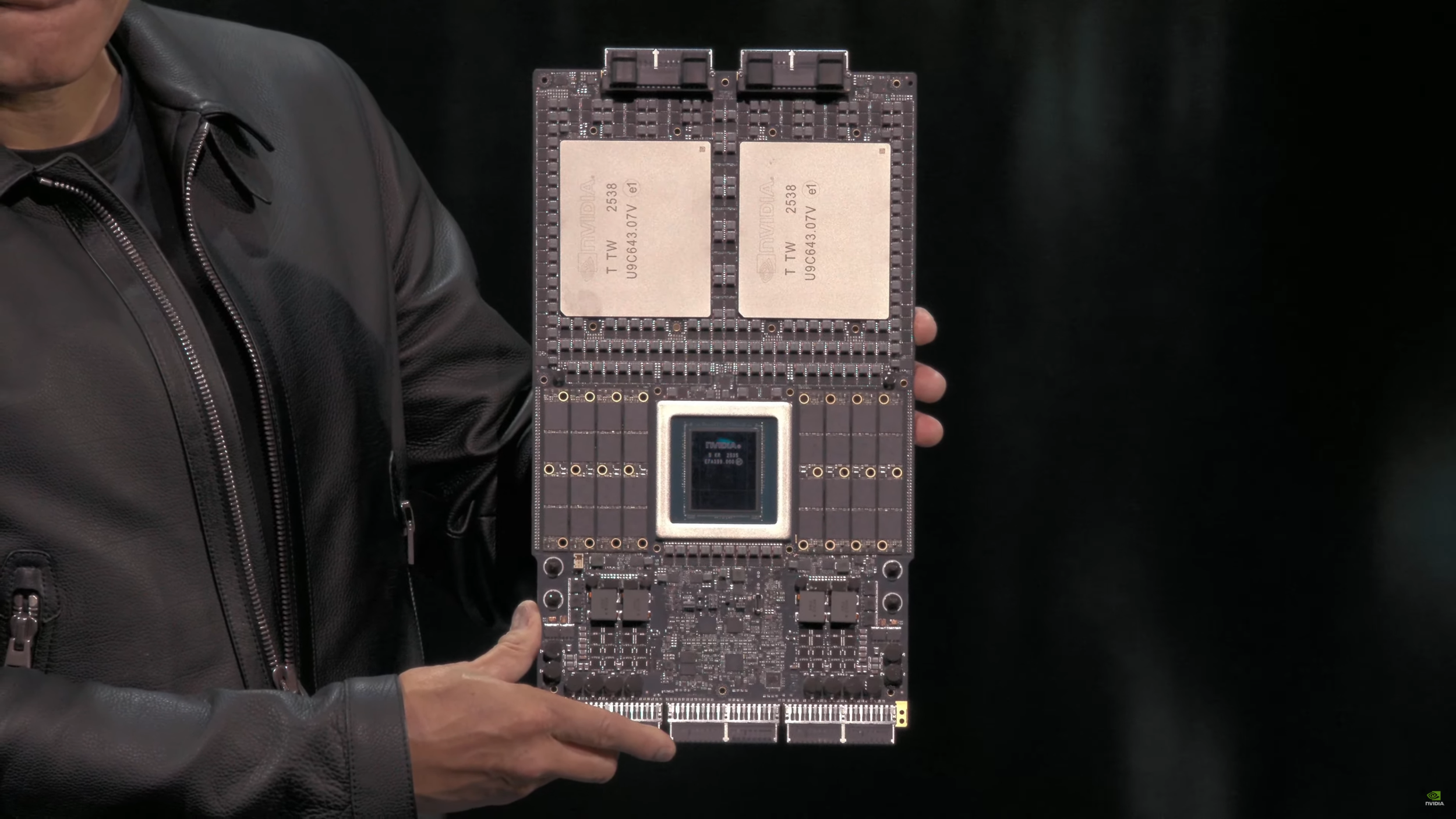

NVIDIA Says Rubin Is In Full Production In Q1 2026 After 3 Years Of Development

NVIDIA’s Rubin AI lineup is now confirmed to be in full production in Q1 2026, and the timeline shift is one of the most meaningful signals from CES 2026 for anyone tracking how fast the data center AI race is escalating. NVIDIA states that Rubin has been in development for 3 years and that production progressed in parallel with Blackwell, which helps explain how the company can keep compressing generational transitions while still delivering broader platform-level upgrades across compute, interconnect, and networking.

What stands out here is not only that Rubin is entering full production earlier than expected, but what this suggests about NVIDIA’s execution model. The company’s recent cadence shows Blackwell ramping in H2 2025 and Blackwell Ultra mass production starting in Q3 2025. With Rubin now in full production in Q1 2026, NVIDIA is effectively tightening the cycle between major platform waves. AI infrastructure starts to look like a live-service-style roadmap, where continuous iteration and faster deployment windows become competitive advantages, especially when hyperscalers are optimizing around utilization, token cost, and time to scale.

NVIDIA is also explicitly targeting H2 2026 for Vera Rubin-based availability through partners, with an initial cloud deployment list that includes AWS, Google Cloud, Microsoft, and OCI, plus NVIDIA Cloud Partners such as CoreWeave, Lambda, Nebius, and Nscale. From an ecosystem perspective, this matters because it frames Rubin not as a lab milestone but as a ready-to-commercialize platform with multiple routes to market, spanning hyperscaler instances and specialized AI cloud providers.

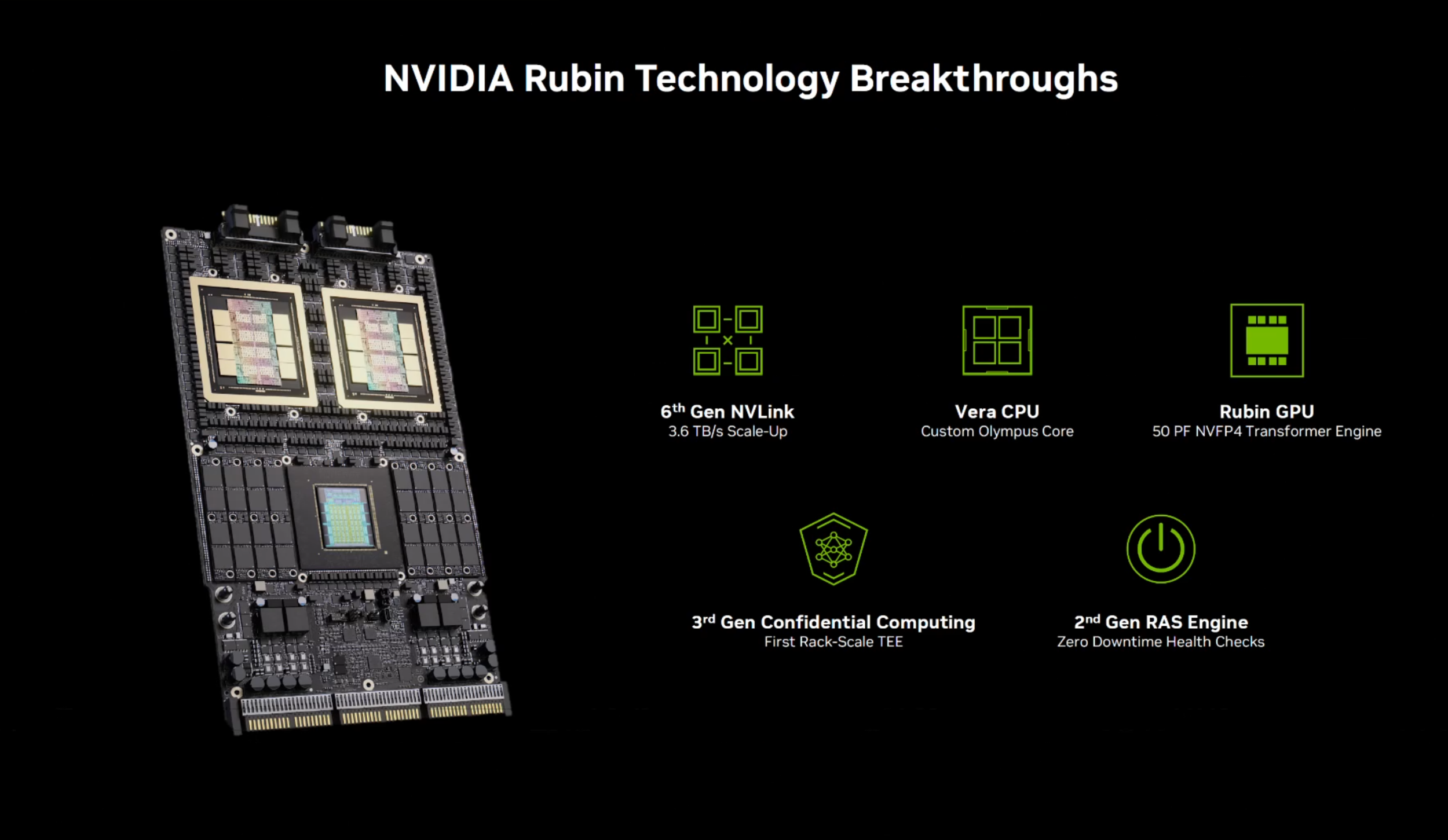

At the platform level, NVIDIA continues to describe Rubin as a six-chip lineup that is already back from fabrication and ready for volume production. It includes the Rubin GPU with 336 billion transistors, the Vera CPU with 227 billion transistors, NVLink 6 switch silicon for interconnect, CX9 and BF4 for networking, and Spectrum-X 102.4T CPO for silicon photonics. The strategic takeaway is straightforward: NVIDIA is widening the definition of a GPU generation into a full-stack architecture refresh, and the production timing suggests the company wants Rubin to become a mainstream revenue driver alongside ongoing Blackwell Ultra shipments through H2 2026.

For the AI industry, this is the kind of announcement that changes planning horizons. If you are a cloud buyer, an enterprise running internal training, or a neocloud trying to win customers on performance per dollar, Rubin’s earlier production window pulls procurement cycles forward and increases pressure to keep infrastructure choices flexible. If you are a competitor, it raises the bar on how quickly a platform can move from roadmap to production scale. And for enthusiasts watching the silicon arms race like it is the esports finals, this is NVIDIA speedrunning the next generation.

Do you see NVIDIA’s accelerated cadence as sustainable, or does it risk creating a constant upgrade treadmill for data centers trying to maximize ROI?