NVIDIA and SK hynix Co-Develop AI SSD to Transform Inference Performance and NAND Architecture

As artificial intelligence workloads continue their industry-wide transition from training-centric pipelines to inference-driven deployment, hardware architectures are being rapidly re-evaluated to meet new performance and efficiency demands. At scale, low-latency access, extreme throughput, and energy efficiency are now critical requirements. According to a report from Chosun Biz, NVIDIA and SK hynix are jointly developing a next-generation solid-state storage solution internally referred to as Storage Next and widely described as an AI-optimized SSD.

The initiative follows NVIDIA’s recent architectural direction, including the integration of general-purpose GDDR7 memory into its upcoming Rubin CPX GPUs to accelerate prefill operations. A similar philosophy is now being applied to NAND flash. Rather than treating storage as a passive backend, the AI SSD is designed to act as a high-performance pseudo-memory layer, purpose-built for inference workloads that require constant access to massive model parameters.

According to the report, SK hynix is expected to present a working prototype by the end of next year. The performance targets are exceptionally aggressive: the AI SSD is reportedly designed to scale up to 100 million IOPS. That figure far exceeds the capabilities of current enterprise-class SSDs and signals a fundamental rethink of how NAND, controllers, and data pathways are architected for AI systems.
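To put the reported 100 million IOPS target in perspective, the short calculation below converts it into sustained bandwidth and into the average time between I/O completions. The report does not state a transfer size, so the 512-byte and 4 KiB block sizes used here are assumptions chosen only for illustration, and the helper names are hypothetical.

```python
# Back-of-the-envelope arithmetic for a 100M IOPS target.
# ASSUMPTION: block sizes (512 B, 4 KiB) are illustrative; the report gives none.

TARGET_IOPS = 100_000_000  # reported target for the AI SSD


def implied_bandwidth_gbps(iops: int, block_bytes: int) -> float:
    """Sustained throughput in GB/s (10**9 bytes/s) if every I/O moves block_bytes."""
    return iops * block_bytes / 1e9


def completion_interval_ns(iops: int) -> float:
    """Average time between consecutive I/O completions, in nanoseconds."""
    return 1e9 / iops


if __name__ == "__main__":
    for block in (512, 4096):
        bw = implied_bandwidth_gbps(TARGET_IOPS, block)
        print(f"{block:>5} B blocks -> {bw:.1f} GB/s")
    print(f"average gap between completions: {completion_interval_ns(TARGET_IOPS):.0f} ns")
```

At 512-byte transfers the target works out to about 51.2 GB/s, and at 4 KiB to about 409.6 GB/s, with one I/O completing every 10 ns on average. Either way, the device as a whole would need to sustain enormous internal parallelism, which is why the article points to new controller and data-path designs rather than incremental SSD improvements.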

The technical motivation behind Storage Next is increasingly clear. Modern inference workloads demand continuous high-speed access to vast parameter sets that cannot be held entirely in HBM or conventional DRAM because of capacity, cost, and power constraints. By introducing an AI-optimized storage tier that behaves more like memory than traditional SSDs do, NVIDIA and SK hynix aim to reduce data movement overhead, improve throughput, and significantly improve energy efficiency across large-scale inference deployments.
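The capacity argument can be made concrete with simple arithmetic: the memory needed just to hold model weights is the parameter count multiplied by the bytes per parameter. The sketch below uses a hypothetical 1-trillion-parameter model stored at 2 bytes per parameter (FP16/BF16) and a hypothetical 192 GB of HBM per accelerator; none of these figures come from the report, and the calculation ignores KV cache and activations, which only add to the footprint.

```python
import math

# ASSUMPTIONS (hypothetical, not from the report):
PARAMS = 1_000_000_000_000   # 1 trillion parameters
BYTES_PER_PARAM = 2          # FP16 / BF16 weights
HBM_PER_GPU_GB = 192         # per-accelerator HBM capacity


def weight_footprint_gb(params: int, bytes_per_param: int) -> float:
    """Memory needed to store the weights alone, in GB (10**9 bytes)."""
    return params * bytes_per_param / 1e9


def accelerators_to_hold_weights(footprint_gb: float, hbm_gb: float) -> int:
    """Minimum device count whose combined HBM fits the weights (no KV cache)."""
    return math.ceil(footprint_gb / hbm_gb)


if __name__ == "__main__":
    fp = weight_footprint_gb(PARAMS, BYTES_PER_PARAM)
    n = accelerators_to_hold_weights(fp, HBM_PER_GPU_GB)
    print(f"weights: {fp:.0f} GB -> at least {n} accelerators of HBM")
```

Under these assumptions the weights alone occupy 2,000 GB, more than ten accelerators' worth of HBM before any working memory is counted. A fast storage tier that can stream parameters on demand is one way to relax that constraint, which is the gap an AI-optimized SSD is meant to address.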

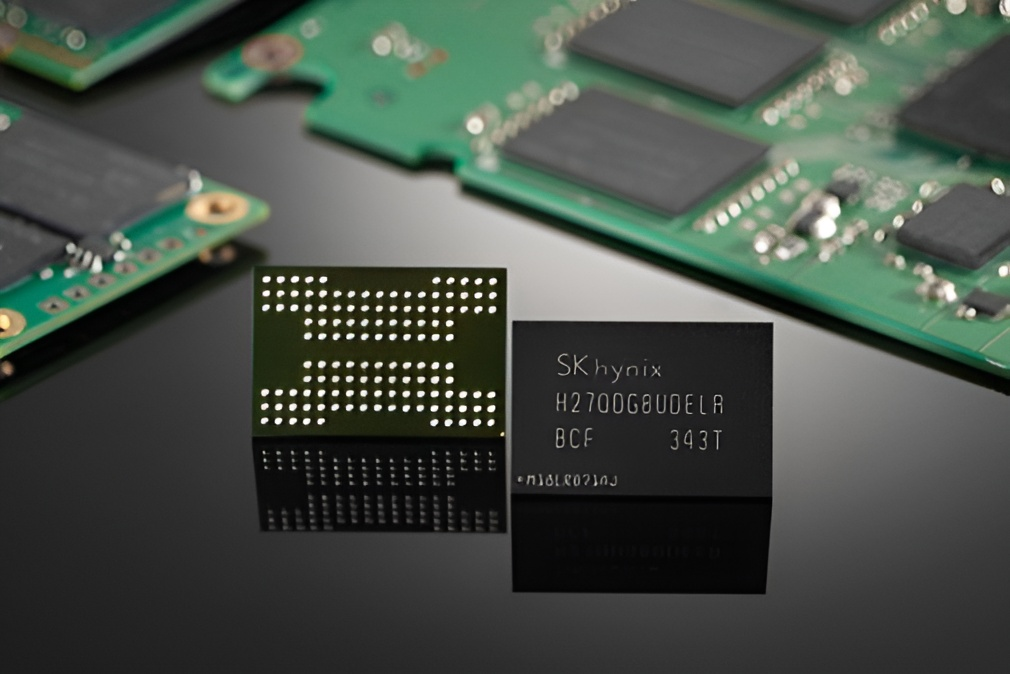

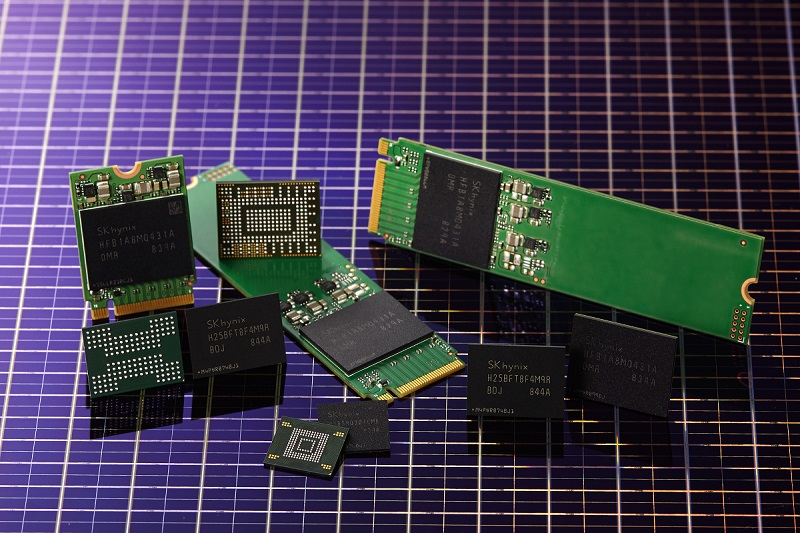

Industry sources indicate that the collaboration focuses on advanced NAND designs paired with specialized controller architectures tuned specifically for AI access patterns. Although the project remains internal and at an early stage, its implications for the broader memory and storage ecosystem are substantial. If AI SSDs reach production and gain adoption among cloud service providers and hyperscalers, NAND flash demand could surge much as DRAM demand has throughout the AI boom.

This prospect raises serious supply chain concerns. NAND manufacturing lines are already under sustained pressure from growing demand in data centers, enterprise storage, and AI infrastructure expansion. The arrival of a mainstream AI SSD category could tighten supply further, potentially triggering pricing dynamics comparable to the current DRAM market, where contract prices continue to climb with little short-term relief.

What emerges is a familiar pattern in the AI era: enabling next-generation workloads comes at the cost of rapid and often disruptive shifts in the supply-demand balance. Suppliers face immense pressure to scale capacity and innovate at the same time, while downstream customers must adapt to volatile pricing and constrained availability. With DRAM already under significant pricing stress, NAND flash now appears positioned as the next pressure point.

If Storage Next evolves from an internal project into a deployed platform technology, it may redefine the role of NAND in AI systems while simultaneously reshaping the economics of the storage market.

Do you see AI-optimized SSDs becoming a standard layer in future inference stacks, or will supply constraints limit their real-world adoption?