Intel Diamond Rapids Xeon CPUs Add CBB Compute Tiles and IMH I/O Plus IMC Tiles

Newly surfaced Linux kernel patch notes shed light on the design of Intel’s next-generation Diamond Rapids Xeon, specifically how the uncore and its performance monitoring stack will be structured across tiles. The details appear in an Intel-authored Linux kernel patch submission, and they point to a deliberate architectural split between compute and memory control that differs from the more integrated approach used on Granite Rapids.

The key takeaway is that Diamond Rapids introduces two new tiles that change where critical functions live. Intel is adding a CBB tile, short for Core Building Block, which serves as the compute tile. Unlike Granite Rapids, where the integrated memory controller sits on the compute tile, Diamond Rapids appears to separate that responsibility out. The integrated memory controller is instead placed on a new IMH tile, short for Integrated I/O and Memory Hub, which combines I/O and memory hub functions in a dedicated tile rather than mixing them into the compute tile.

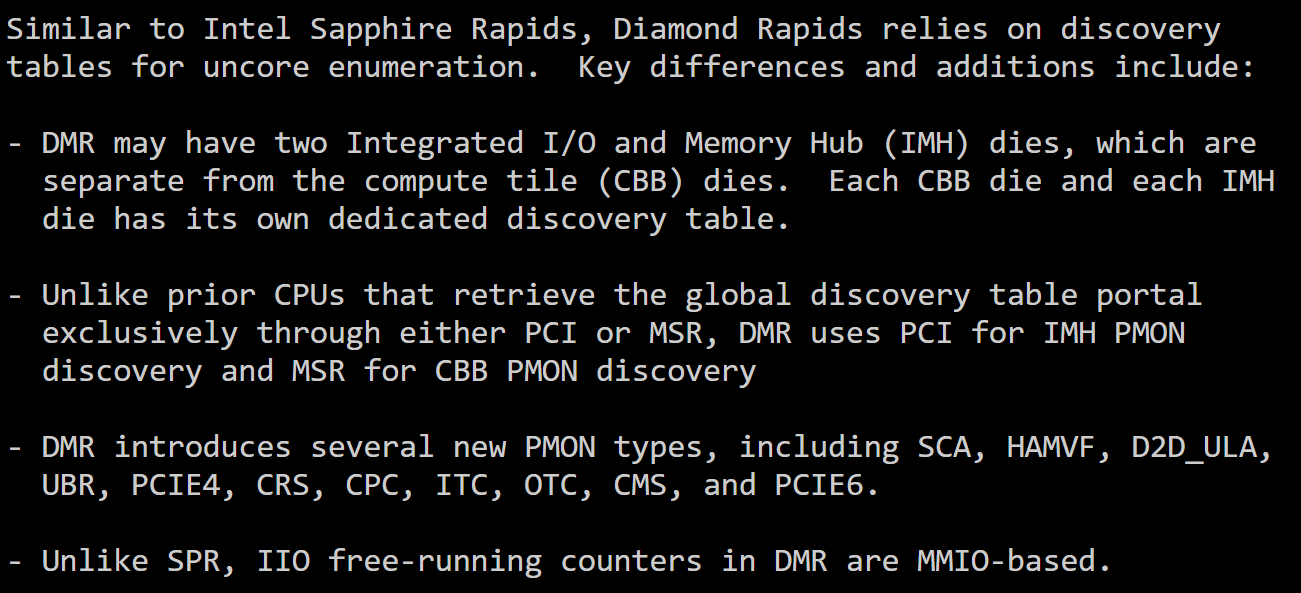

According to the kernel patch text, Diamond Rapids may include up to two IMH dies, with each CBB die and each IMH die having its own dedicated discovery table. This matters for platform bring-up and tooling because discovery tables are foundational to uncore enumeration, performance monitoring, and system-level introspection. It also suggests Intel is leaning into a more modular multi-tile strategy where monitoring and discovery are tile-aware, not just platform-wide.
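The per-die layout described above can be pictured with a small model. This is only an illustrative sketch, not kernel code: the `DiscoveryTable` class, the `build_tables` helper, the die naming scheme, the requirement of at least one IMH die, and the die counts in the usage line are all assumptions made for the example. Only the "one table per die" rule and the limit of two IMH dies come from the patch text.

```python
from dataclasses import dataclass, field

MAX_IMH_DIES = 2  # "up to 2 IMH dies" per the patch text


@dataclass
class DiscoveryTable:
    """Hypothetical stand-in for one die's uncore discovery table."""
    owner: str                                   # die name, e.g. "CBB0" or "IMH1"
    unit_types: list = field(default_factory=list)  # PMON unit types found on this die


def build_tables(cbb_count: int, imh_count: int) -> dict:
    """Return one discovery table per die, keyed by a made-up die name."""
    if cbb_count < 1:
        raise ValueError("expected at least one CBB die")
    # Assumption: at least one IMH die is present, since the IMC lives there.
    if not 1 <= imh_count <= MAX_IMH_DIES:
        raise ValueError(f"expected 1 to {MAX_IMH_DIES} IMH dies, got {imh_count}")
    names = [f"CBB{i}" for i in range(cbb_count)]
    names += [f"IMH{i}" for i in range(imh_count)]
    return {name: DiscoveryTable(owner=name) for name in names}


# Die counts here are illustrative only; the article does not state them.
tables = build_tables(cbb_count=4, imh_count=2)
print(sorted(tables))
```

The point of the sketch is that enumeration tooling would walk a collection of tables, one per die, instead of a single platform-wide table.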

A separate observation shared by InstLatX64 on X suggests the IMH die may sit on the base tile, in a placement approach similar to Clearwater Forest. If accurate, that could imply a packaging and routing strategy optimized for predictable I/O and memory access paths while keeping compute tiles focused on core scaling.

The kernel patch also outlines notable monitoring and enumeration behavior that helps explain what Intel is changing at the platform layer:

Diamond Rapids may use PCI for IMH PMON discovery and MSR for CBB PMON discovery, rather than relying on a single method for the global discovery table portal.

Diamond Rapids introduces multiple new PMON types, including SCA, HAMVF, D2D ULA, UBR, PCIE4, CRS, CPC, ITC, OTC, CMS, and PCIE6.

Unlike Sapphire Rapids, IIO free-running counters in Diamond Rapids are described as MMIO-based.
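The three points above boil down to a lookup from die type to access method. The sketch below encodes them as plain data under stated assumptions: the `Access` enum, the `discovery_access` helper, and die names such as `CBB0` are hypothetical and do not correspond to identifiers in the kernel patch. The PMON type names are copied as written in this article.

```python
from enum import Enum


class Access(Enum):
    MSR = "msr"
    PCI = "pci"
    MMIO = "mmio"


# Discovery access per die type, as summarized from the patch notes.
PMON_DISCOVERY = {"CBB": Access.MSR, "IMH": Access.PCI}

# IIO free-running counters are described as MMIO-based.
IIO_FREE_RUNNING = Access.MMIO

# New PMON types named in the patch notes (spelled as in this article).
NEW_PMON_TYPES = (
    "SCA", "HAMVF", "D2D ULA", "UBR", "PCIE4", "CRS",
    "CPC", "ITC", "OTC", "CMS", "PCIE6",
)


def discovery_access(die_name: str) -> Access:
    """Map a hypothetical die name such as 'CBB0' or 'IMH1' to its access method."""
    kind = die_name.rstrip("0123456789")  # drop the trailing instance number
    try:
        return PMON_DISCOVERY[kind]
    except KeyError:
        raise ValueError(f"unknown die kind: {die_name!r}") from None


for die in ("CBB0", "IMH0", "IMH1"):
    print(die, discovery_access(die).value)
```

In other words, a tool that enumerates Diamond Rapids PMON units would need at least two discovery code paths, one per die type, rather than one shared path.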

Insightful Linux kernel patch: #Intel #DiamondRapids CPU chip = Core Building Block (CBB) and unlike #GNR they do not have an IMC. #DMR can have a chip with two "Integrated I/O and one memory hub (IMH)".

— InstLatX64 (@InstLatX64) January 2, 2026

DMR goes even further than #CWF the IMC is on the Base Tile.


All of this implies Intel is investing in a more granular and scalable uncore discovery and monitoring model that aligns with multi-tile growth, multi-socket deployments, and next-generation I/O expansion. The appearance of PCIE6 among the monitoring types is also consistent with the industry direction for next-generation data center platforms, and it suggests that PCIe Gen6 is part of the Diamond Rapids platform story.
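On a running Linux system, the result of uncore discovery is visible to tools as PMU devices under `/sys/bus/event_source/devices`, where Intel uncore units are registered with an `uncore_` prefix. The following is a minimal sketch for grouping those entries by unit type. The helper names are made up for the example, and which unit names a Diamond Rapids system would actually expose is not stated in the article, so the sample names in the usage note come from existing Xeon platforms.

```python
import re
from collections import defaultdict
from pathlib import Path

SYSFS_PMUS = Path("/sys/bus/event_source/devices")


def group_uncore_pmus(names):
    """Group names like 'uncore_cha_3' by unit type ('cha'), dropping the instance suffix."""
    groups = defaultdict(list)
    for name in names:
        if not name.startswith("uncore_"):
            continue  # skip core PMUs and other non-uncore devices
        unit_type = re.sub(r"_\d+$", "", name[len("uncore_"):])
        groups[unit_type].append(name)
    return {unit: sorted(members) for unit, members in sorted(groups.items())}


def list_uncore_pmus(root: Path = SYSFS_PMUS):
    """Read PMU device names from sysfs; returns {} if the directory is absent."""
    if not root.is_dir():
        return {}
    return group_uncore_pmus(p.name for p in root.iterdir())


if __name__ == "__main__":
    for unit_type, pmus in list_uncore_pmus().items():
        print(f"{unit_type}: {len(pmus)} instance(s)")
```

For example, `group_uncore_pmus(["uncore_cha_0", "uncore_cha_1", "uncore_imc_0", "cpu"])` groups the two CHA instances under `cha`, puts the memory controller under `imc`, and ignores `cpu`.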

Beyond tile design, the broader rumor and platform chatter around Diamond Rapids continues to center on extreme scale targets. Previous reports have floated up to 192 cores, with some speculation extending to 256 cores, alongside the expectation that the chips will use Intel 18A and Panther Cove P-cores. Early platform discussions also point to TDP targets of up to 650 W on an LGA 9324 platform with multi-socket capability, and a potential introduction window between mid-2026 and the second half of 2026. Intel has not publicly confirmed most of these figures, so the most grounded items in this update remain the tile naming, discovery table behavior, and monitoring details documented in the kernel patch itself.

If the CBB and IMH separation holds through final silicon, Diamond Rapids could represent a meaningful step in how Intel balances core scaling with memory and I/O flexibility, particularly for AI and HPC racks where PCIe Gen6 readiness and memory subsystem behavior will be major platform differentiators.

Do you prefer Intel splitting memory and I/O into a dedicated IMH tile for scalability, or would you rather see the memory controller stay closer to compute, as on Granite Rapids, for potentially simpler latency tuning?