Intel and NVIDIA Confirm Custom Xeon With NVLink; Intel Reaffirms 16-Channel Diamond Rapids, Coral Rapids With SMT, and Nova Lake Landing Late 2026

Intel used its Q4 2025 financial results to reframe its near-term client and data center roadmap around what it says customers are demanding right now. It also spotlighted a headline partnership with NVIDIA. In the same update, Intel reported $13.7 billion in Q4 2025 revenue, down 4% year over year. CEO Lip-Bu Tan described ongoing supply constraints and said Intel is aiming for improved supply stability by Q2 2026. The bigger signal for the industry, however, is that Intel is making three server moves. It is narrowing its server focus and accelerating its next Xeon generation. It is also building a custom Xeon CPU, fully integrated with NVIDIA NVLink technology, for AI host nodes.

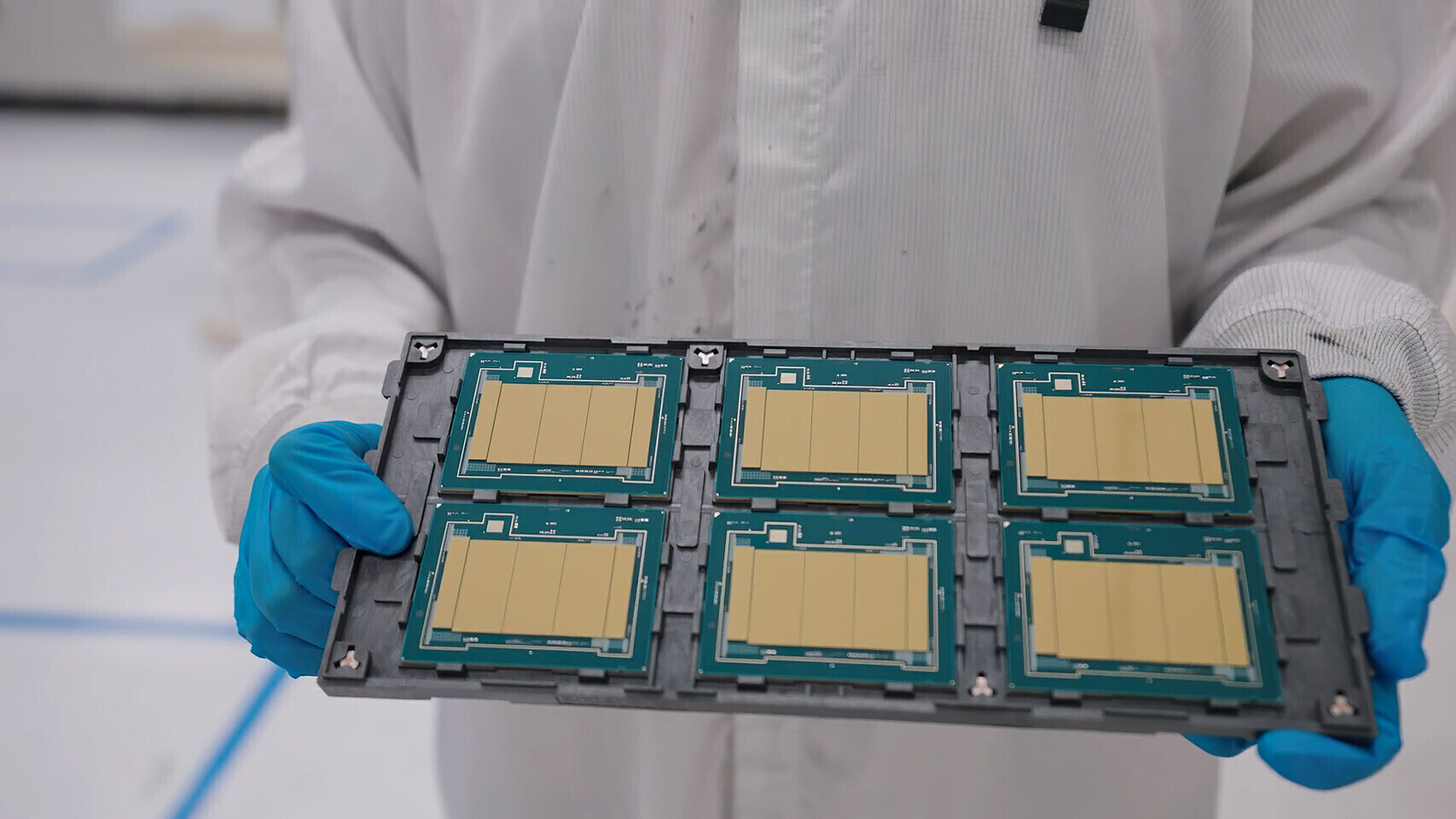

On the server side, Intel says Granite Rapids is continuing to ramp with strong customer response. Sapphire Rapids and Emerald Rapids remain positioned to serve mainstream server deployments. The major roadmap realignment is what comes next. Intel is now laser-focused on a 16-channel Diamond Rapids Xeon design. It is explicitly simplifying the server lineup by removing the 8-channel mainstream Diamond Rapids variant from the roadmap. In practical terms, Intel is concentrating resources on the highest-performance configuration. That segment matters most for hyperscaler and AI infrastructure refresh cycles, where memory bandwidth and platform scale have become strategic differentiators.

Following Diamond Rapids, Intel says Coral Rapids will bring back SMT (simultaneous multithreading), restoring multithreading to the Xeon roadmap. That statement is a clear sign that Intel wants to re-establish competitiveness in core and thread throughput. This matters because AI server nodes increasingly demand strong CPU-side scheduling, data feeding, and orchestration alongside accelerators. If Intel executes cleanly, Coral Rapids becomes the moment where Xeon tries to win back the perception of data center leadership rather than just keep pace.

The most strategically loaded disclosure is Intel's confirmation that it is working closely with NVIDIA on a custom Xeon CPU that is fully integrated with NVIDIA NVLink technology. Intel does not frame this as a translation layer or a loose platform handshake. As Intel describes it, the goal is best-in-class x86 performance for AI host nodes, with tighter coupling to NVIDIA GPU platforms. For hyperscalers, this kind of integration offers three benefits:

- less friction when pairing CPUs with GPUs;
- better interconnect efficiency;
- more options to mix and match at scale when designing systems around Blackwell-era and future GPU generations.

From a market positioning viewpoint, Intel is pitching a more unified CPU-plus-GPU host node story that aligns with where AI infrastructure spending is landing today. It also signals that Intel is willing to partner aggressively to remain central in AI server architectures, even where NVIDIA's ecosystem is the dominant force.

On the client side, Intel highlighted its Core Ultra Series 3 launch, codenamed Panther Lake. Intel positions it as its most broadly adopted and globally available AI PC platform ever, backed by more than 200 notebook designs with OEM partners. Intel also reaffirmed that Nova Lake is planned for late 2026. The company pitches it as a best-in-class, performance- and cost-optimized step for both desktop and notebook segments.

Put together, Intel's message is a two-track execution plan. On the first track, Intel aims to stabilize supply and keep momentum with Panther Lake in the near term. On the second, it plans to concentrate server engineering on 16-channel Diamond Rapids and accelerate Coral Rapids with SMT. It also intends to deliver an NVIDIA-aligned AI host node offering through the custom Xeon with NVLink integration.

Which do you think is the bigger unlock for Intel's comeback narrative: the 16-channel Diamond Rapids focus, or the custom Xeon with NVLink designed to sit directly in NVIDIA-powered AI host nodes?