AMD Unlocks Local 128B LLM Support with Ryzen AI MAX+ Processors and 96 GB Graphics Memory for PCs

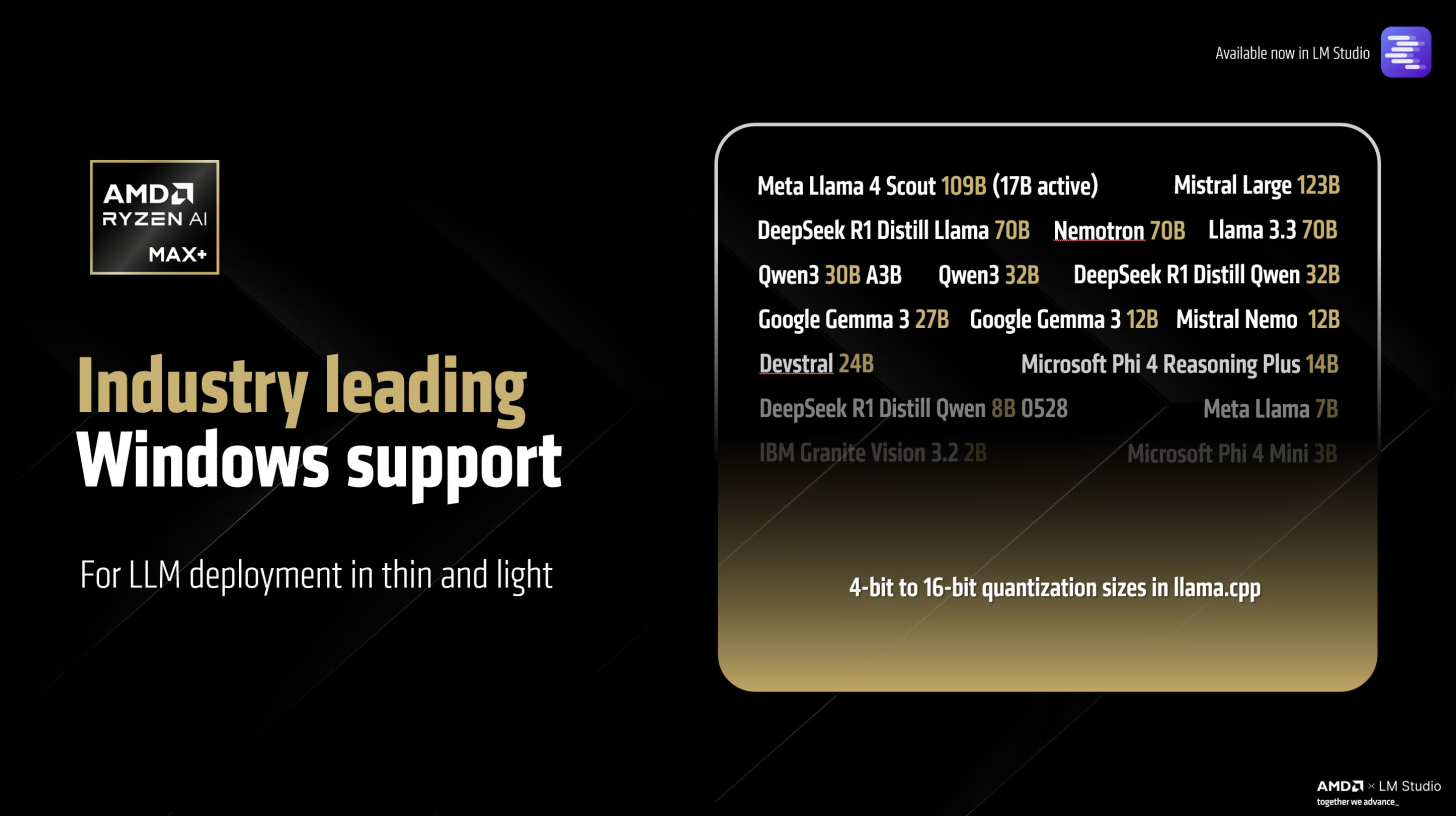

AMD has taken a major leap forward in edge AI computing with its Adrenalin Edition 25.8.1 driver, which adds support for running LLMs of up to 128 billion parameters locally on consumer PCs powered by Ryzen AI MAX+ processors. The update lets large models such as Meta's Llama 4 Scout run entirely offline, a significant step toward making capable AI accessible to everyday users.

In its announcement, AMD explained that Variable Graphics Memory (VGM) now lets Ryzen AI MAX+ systems dedicate up to 96 GB of their unified system memory to the integrated GPU as graphics memory. That is enough to hold very large LLMs, including Mixture-of-Experts (MoE) models, entirely on the PC with no cloud infrastructure. MoE models are a particularly good fit: all of their expert weights must be loaded into memory, but only a subset is used for each token. Llama 4 Scout, for example, activates only about 17B parameters per token, so it can deliver practical tokens-per-second (TPS) throughput even though far more parameters sit in memory.
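The memory and throughput reasoning above can be checked with some back-of-envelope arithmetic. The Python sketch below is a simplified model, not AMD's method. It assumes weight memory is simply parameters times bits per weight (ignoring the KV cache, activations, and quantization metadata), and it treats token generation as memory-bandwidth-bound, so the TPS ceiling is bandwidth divided by the bytes of active weights read per token. The 256 GB/s bandwidth figure and the function names are illustrative assumptions.

```python
"""Back-of-envelope sizing for local LLM inference (simplified model)."""

GB = 1e9  # decimal gigabytes


def weight_memory_gb(total_params: float, bits_per_weight: float) -> float:
    """Memory needed to hold every weight, ignoring all runtime overhead."""
    return total_params * bits_per_weight / 8 / GB


def decode_tps_upper_bound(active_params: float, bits_per_weight: float,
                           bandwidth_gb_s: float) -> float:
    """Rough ceiling on tokens/s if each generated token must stream the
    active weights from memory once (memory-bandwidth-bound decoding)."""
    bytes_per_token = active_params * bits_per_weight / 8
    return bandwidth_gb_s * GB / bytes_per_token


if __name__ == "__main__":
    VRAM_GB = 96          # VGM allocation cited in the article
    BANDWIDTH_GB_S = 256  # assumption: ~256 GB/s unified memory bandwidth

    # Which precisions let 128B parameters fit in the 96 GB allocation?
    for bits in (16, 8, 4):
        need = weight_memory_gb(128e9, bits)
        print(f"128B params @ {bits}-bit: {need:.0f} GB, "
              f"fits in {VRAM_GB} GB: {need <= VRAM_GB}")

    # MoE: all experts stay resident, but only ~17B parameters are read per token.
    moe = decode_tps_upper_bound(17e9, 4, BANDWIDTH_GB_S)
    dense = decode_tps_upper_bound(128e9, 4, BANDWIDTH_GB_S)
    print(f"~17B active @ 4-bit: <= {moe:.1f} tok/s; "
          f"128B dense @ 4-bit: <= {dense:.1f} tok/s")
```

Under these assumptions, 128B parameters fit in 96 GB only at roughly 4-bit precision (about 64 GB), and a model that reads about 17B active parameters per token has a throughput ceiling roughly 7.5 times higher than a dense model of the full size. Real-world TPS will sit below these upper bounds.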

This capability comes from AMD's Strix Halo platform, which combines Zen 5 CPU cores, RDNA 3.5 integrated graphics, and an XDNA 2 NPU on a single chip for on-device inference. The large memory pool also supports much longer context windows: compared with the traditional 4,096-token window, supported models can now use contexts of up to 256,000 tokens. That enables far longer conversations, retrieval over large bodies of text, and richer generative tasks, although very long prompts still take more time to process. Together, these improvements significantly raise the bar for local AI on a consumer-grade PC.
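The context-length claim is largely a memory question: the key/value (KV) cache grows linearly with the number of tokens held in context. The sketch below estimates that growth for a hypothetical attention configuration (48 layers, 8 KV heads, head dimension 128, FP16 cache). These numbers are assumptions chosen for illustration, not Llama 4 Scout's actual shape, and the model ignores techniques such as sliding-window or chunked attention that some models use to shrink the cache.

```python
"""Estimate KV-cache memory as a function of context length (simplified)."""

from dataclasses import dataclass

GB = 1e9  # decimal gigabytes


@dataclass(frozen=True)
class AttnConfig:
    """Hypothetical transformer attention shape, not any specific model."""
    layers: int
    kv_heads: int
    head_dim: int
    bytes_per_elem: int  # 2 for an FP16/BF16 cache, 1 for an 8-bit cache


def kv_cache_bytes(cfg: AttnConfig, context_tokens: int) -> int:
    # Every layer stores one key vector and one value vector per KV head
    # for each token, hence the leading factor of 2.
    per_token = 2 * cfg.layers * cfg.kv_heads * cfg.head_dim * cfg.bytes_per_elem
    return per_token * context_tokens


if __name__ == "__main__":
    cfg = AttnConfig(layers=48, kv_heads=8, head_dim=128, bytes_per_elem=2)
    for ctx in (4_096, 32_768, 256_000):
        print(f"{ctx:>7} tokens: {kv_cache_bytes(cfg, ctx) / GB:.2f} GB")
```

For this hypothetical configuration the cache grows from about 0.81 GB at 4,096 tokens to about 50 GB at 256,000 tokens, which is why long contexts depend on a large memory pool. An 8-bit KV cache (`bytes_per_elem=1`) would halve those figures.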

Strix Halo systems are still relatively scarce and command premium prices, with configurations often exceeding $2,000, but the implications are transformative. The technology paves the way for AI assistants and creative tools that run completely offline, with no network round-trips, no need to send private data to the cloud, and no recurring API fees.

In practical terms, AMD is enabling developers, researchers, and power users to run generative AI workloads on the desktop without enterprise-scale GPUs or datacenter access. It also signals a decisive shift in PC design toward more memory, better AI accelerators, and local-first AI computing.

As AMD continues to push the envelope with Strix Halo and the Ryzen AI MAX+ series, the fusion of gaming, productivity, and AI creation will only deepen, giving PC users a glimpse today of what the future of intelligent computing could look like.

What would you use 128B parameter AI models for if you could run them locally on your own PC? Share your ideas below.