AMD Confirms Instinct MI500 For 2027: 2nm Process, CDNA 6, And HBM4E To Push Next Gen AI Rack Performance

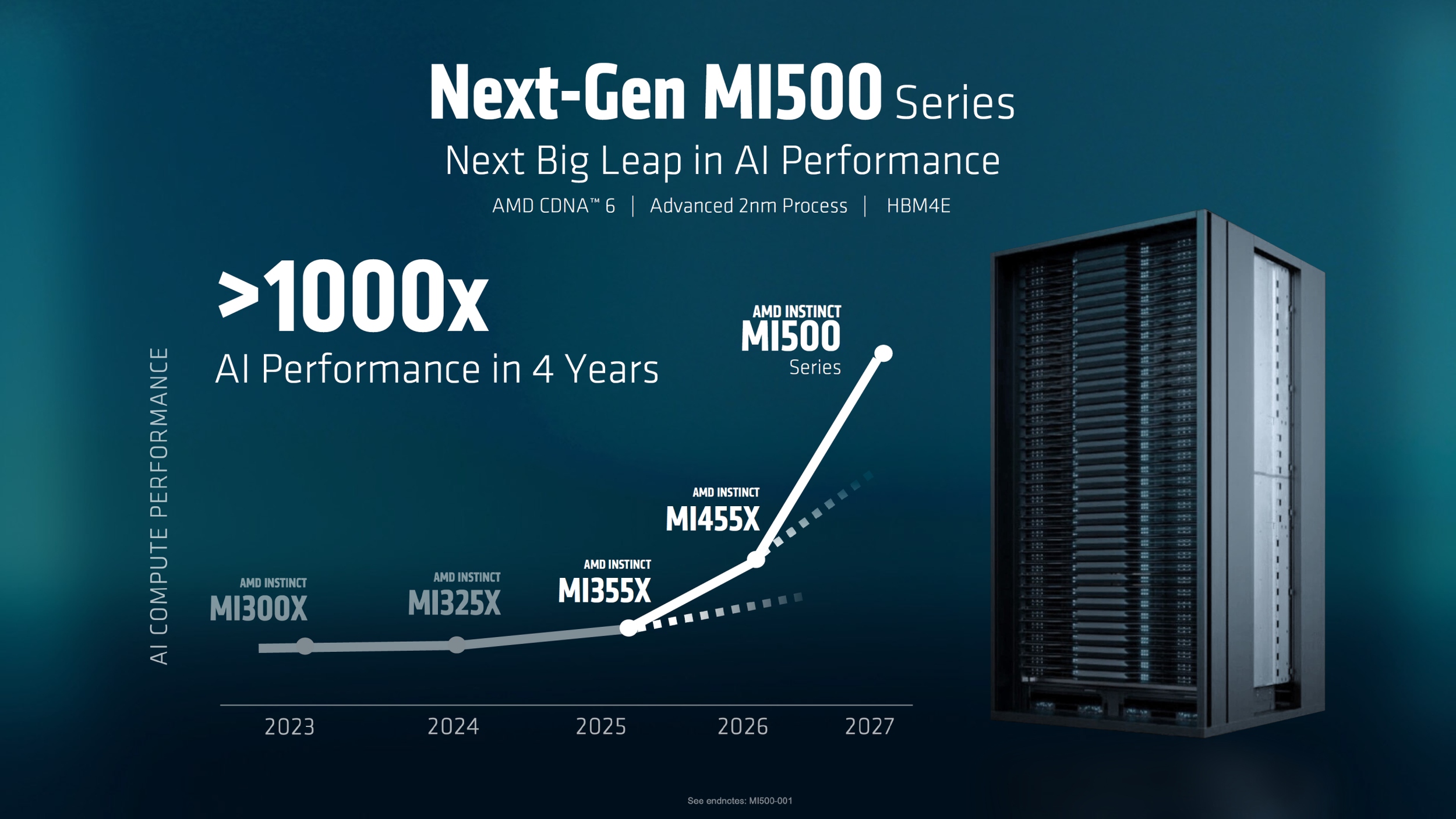

AMD has outlined the next step in its Instinct accelerator roadmap: the Instinct MI500 series, scheduled for a 2027 launch as the follow-up to the MI400 family planned for 2026. The headline is that MI500 moves to an advanced 2nm manufacturing node, introduces the new CDNA 6 architecture, and upgrades memory to HBM4E, a combination AMD is framing as the foundation for a disruptive uplift in overall AI performance and next-generation rack-scale deployments.

This roadmap detail also reinforces AMD’s strategic shift toward an annual cadence for data center AI, mirroring the faster iteration tempo the market now expects as NVIDIA alternates between its own standard and Ultra releases. The competitive context is clear: AI infrastructure no longer operates on long multi-year cycles. Buyers are planning around faster refresh windows, and vendors are trying to lock in platform momentum through sustained architectural and memory bandwidth improvements, not just incremental compute.

From a technology standpoint, MI500 is described as using CDNA 6, while MI400 is tied to CDNA 5. The jump to HBM4E matters because AMD is explicitly pointing to bandwidth beyond the 19.6 TB/s-class figures referenced for HBM4-powered MI400 accelerators. In practice, HBM4E is positioned to raise effective throughput for modern transformer training and inference workloads by reducing memory pressure, increasing usable batch sizes, and improving utilization in bandwidth-sensitive scenarios. Even without exact numbers, AMD’s messaging implies that MI500’s performance uplift will be defined not solely by raw FLOPs but by how efficiently the platform can feed compute with faster memory and a more advanced architecture.
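To make the "feeding compute" point concrete, here is a minimal roofline-style sketch in Python. It caps attainable throughput at whichever is lower: the compute peak, or memory bandwidth multiplied by the workload's arithmetic intensity (FLOPs performed per byte moved). Only the 19.6 TB/s figure comes from AMD's MI400 messaging. The HBM4E bandwidth and the peak compute value are hypothetical placeholders chosen for illustration, not AMD specifications.

```python
# Roofline-style estimate: throughput is limited either by the compute peak
# or by how fast memory can deliver operands, whichever is lower.

def attainable_tflops(peak_tflops: float, bandwidth_tb_s: float,
                      flops_per_byte: float) -> float:
    """Return min(compute roof, memory roof) in TFLOP/s."""
    memory_roof = bandwidth_tb_s * flops_per_byte  # TB/s * FLOP/byte = TFLOP/s
    return min(peak_tflops, memory_roof)

HBM4_TB_S = 19.6                 # figure cited for HBM4-powered MI400 parts
ASSUMED_HBM4E_TB_S = 25.0        # ASSUMPTION: placeholder, not an AMD number
ASSUMED_PEAK_TFLOPS = 20_000.0   # ASSUMPTION: placeholder, not an AMD number

# Sweep from bandwidth-bound workloads (low intensity) to compute-bound ones.
for intensity in (10, 100, 1_000, 2_000):
    base = attainable_tflops(ASSUMED_PEAK_TFLOPS, HBM4_TB_S, intensity)
    faster = attainable_tflops(ASSUMED_PEAK_TFLOPS, ASSUMED_HBM4E_TB_S, intensity)
    print(f"{intensity:>5} FLOP/byte: {base:>8.0f} -> {faster:>8.0f} TFLOP/s "
          f"({faster / base:.2f}x)")
```

Under these assumed numbers, low-intensity workloads speed up in proportion to the bandwidth increase, while high-intensity workloads hit the compute roof and gain nothing from faster memory. That is the sense in which bandwidth-sensitive scenarios benefit most from a memory upgrade.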

There is also an important naming signal in the MI500 discussion. AMD’s roadmap messaging suggests it will keep the CDNA name for Instinct accelerators rather than switching the data center line to UDNA, which simplifies the architecture story for enterprise buyers. Consistent branding matters in the AI server market because procurement and long-term platform planning are increasingly done at the level of the architecture and software stack, not the individual SKU.

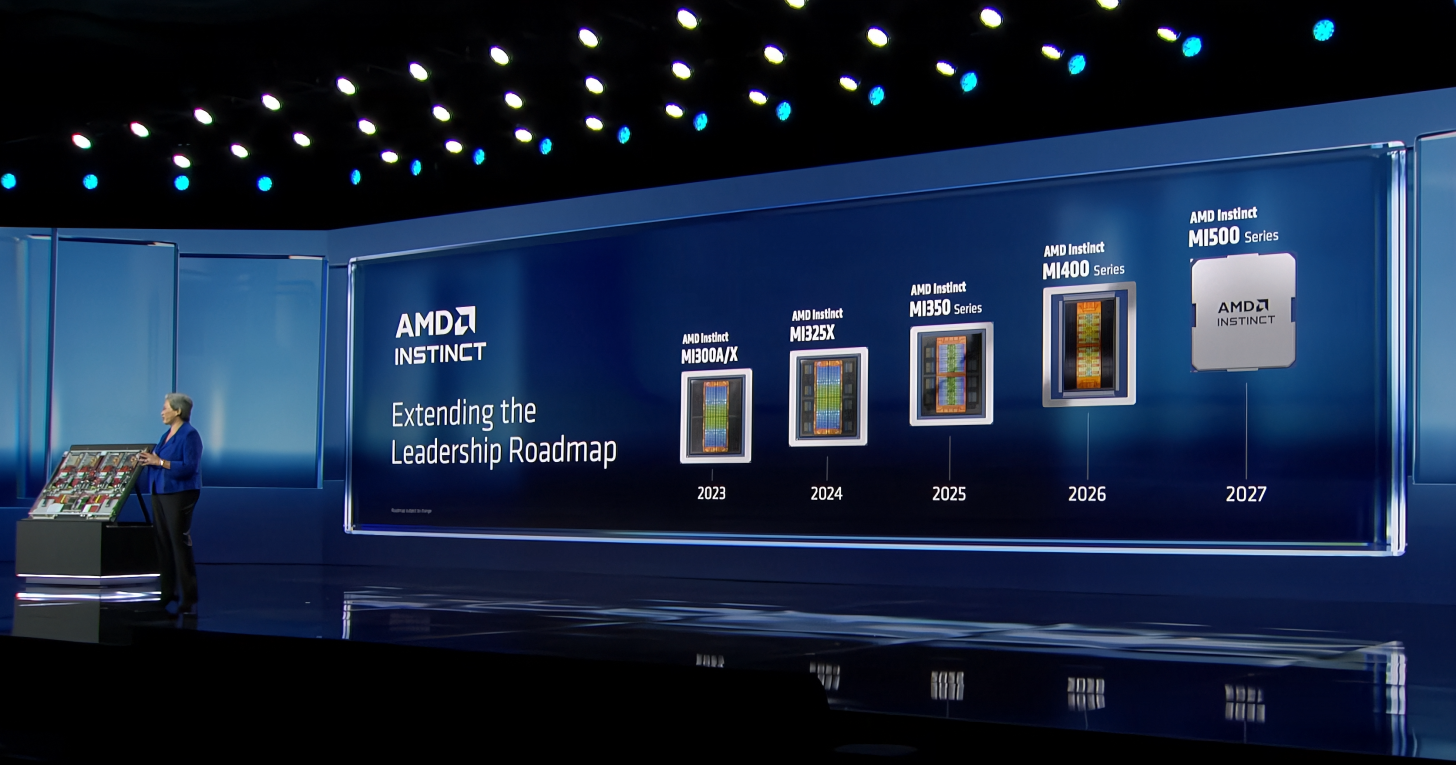

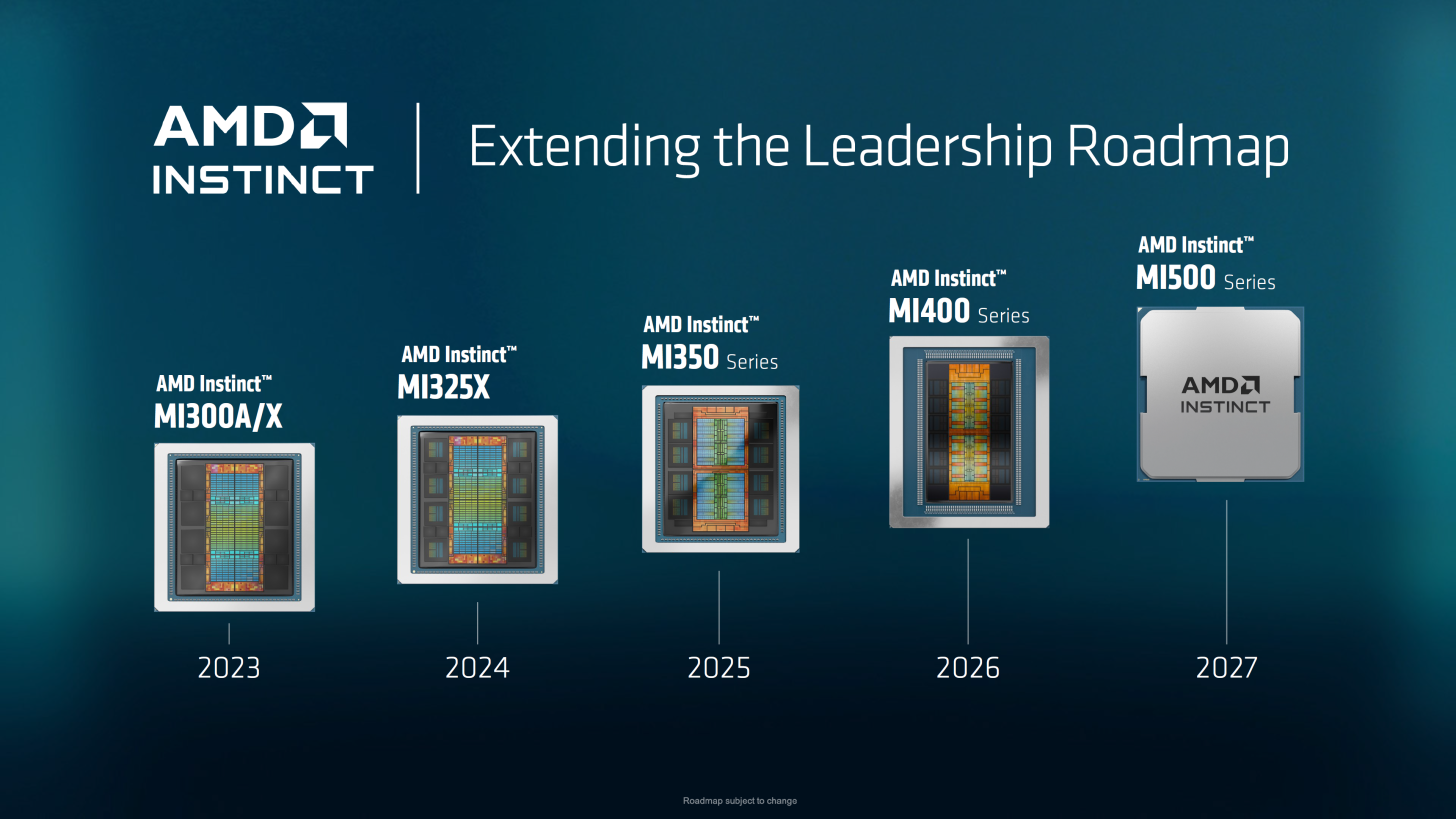

The roadmap slide also places MI500 in a bigger narrative arc. AMD shows a clear progression from MI300A and MI300X in 2023 to MI325X in 2024, the MI350 series in 2025, the MI400 series in 2026, and the MI500 series in 2027. That year-by-year structure is a strong signal that AMD wants customers to view Instinct as a stable, predictable platform where each generation arrives on schedule with measurable jumps in capability. AMD also claims it is on a trajectory toward over 1,000x AI performance improvement in four years, a bold ambition statement aimed at hyperscalers and AI cloud providers that are building roadmaps around explosive demand growth.
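For a sense of scale, the 1,000x claim can be converted into an implied per-year multiplier. The sketch below assumes the gain compounds evenly across four yearly steps. That even split is an assumption made here for illustration: AMD has not described the trajectory that way, and the source does not say how the figure is measured.

```python
# Implied per-year multiplier if a total gain compounds evenly over N years.
# ASSUMPTION: even compounding; the real trajectory may be front- or back-loaded.

def implied_annual_factor(total_gain: float, years: int) -> float:
    """Return the constant yearly multiplier g such that g ** years == total_gain."""
    return total_gain ** (1.0 / years)

factor = implied_annual_factor(1000.0, 4)
print(f"{factor:.2f}x per year")  # prints "5.62x per year"
```

In other words, an evenly compounded 1,000x over four years works out to roughly 5.6x every year, far steeper than a typical single-generation silicon uplift.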

For the market, MI500’s 2027 timing is notable because it implies AMD expects MI400 and its associated rack platforms to still be ramping through 2026 into 2027, while MI500 prepares to take over as the next cycle of platform leadership. This is exactly the pattern we are now seeing across the industry: overlapping ramps, multiple generations shipping concurrently, and customers choosing the best fit based on deployment windows, power and cooling constraints, and software readiness rather than waiting for a single perfect generation.

Do you think AMD’s annual Instinct cadence will help it win more long-term AI platform commitments, or will the market still default to the vendor with the strongest full-stack software ecosystem, even if the hardware roadmap is equally aggressive?