NVIDIA GB300 Blackwell Ultra AI Servers Reportedly See Major Yield Gains After Design Choices, Setting Up a Big 2026 Ramp

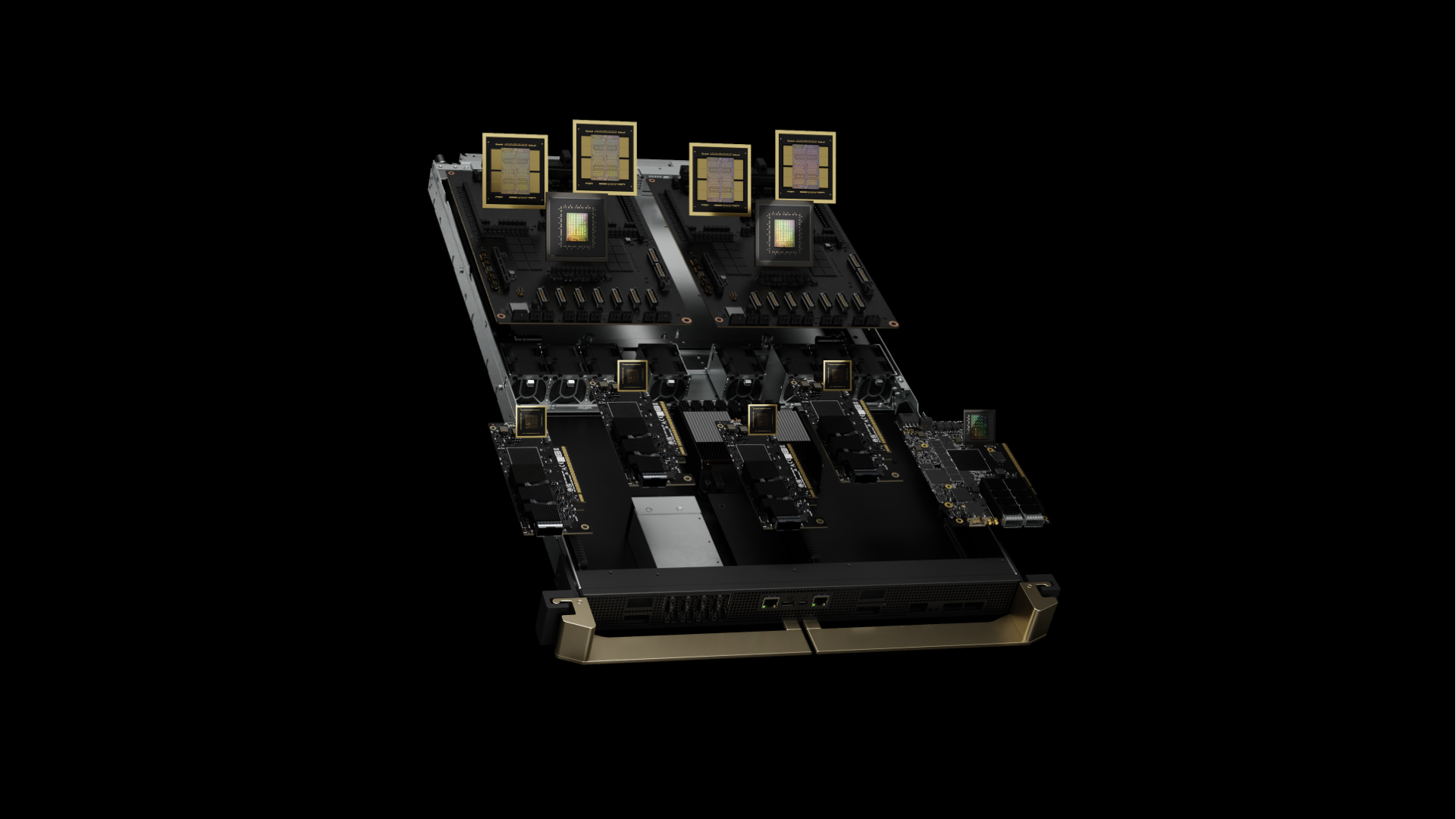

NVIDIA has been running an annual cadence for its AI platform roadmap, and 2025 has been defined by Blackwell scaling from announcement into real volume. Blackwell Ultra debuted at GTC 2025, and its volume ramp accelerated from mid-Q3 2025 into Q4 2025, while hyperscalers spent much of the year aggressively deploying Blackwell GB200-class server configurations.

Looking ahead to 2026, the spotlight is expected to shift toward Blackwell Ultra systems, and a new report suggests the ramp could be substantial. According to a report from United News Daily, GB300 shipments are expected to rise 129% YoY, driven by demand from major AI operators including Microsoft, Amazon, Meta, and other large-scale buyers.

The same report also cites estimates that Blackwell Ultra could reach as many as 60,000 racks shipped in 2026, a scale that would immediately pressure the entire ecosystem, from board-level design maturity to ODM factory throughput and supply chain coordination.

Beyond shipment growth, one of the most important signals in the report is the manufacturing readiness behind it. The report indicates that GB300 production yield rates have improved meaningfully following design and execution adjustments across the build pipeline. It highlights that manufacturers such as Foxconn have adapted to evolving supply chain requirements, helping stabilize output quality and throughput for high-density AI server builds.

A key detail is NVIDIA’s reported decision to stick with the older Bianca board configuration for Blackwell Ultra rather than move to the newer Cordelia design, reportedly because of Cordelia's added complexity. In practical terms, that kind of call can be a yield multiplier. Less design churn, fewer integration variables, and more repeatable assembly processes typically translate into cleaner production, higher first-pass success, and faster volume confidence from suppliers.

Blackwell Ultra is expected to retain much of the structural foundation of the earlier Blackwell platform while delivering meaningful gains through B300-class architectural advancements. The report frames GB300 as the next step in raising performance standards, building on what GB200 enabled in the market.

For the broader ecosystem, GB300 is also positioned as a bridge to Rubin, NVIDIA’s next-generation AI rack lineup. The report suggests Rubin could enter the market in H2 2026, with an official showcase planned for GTC in March 2026. If that timeline holds, NVIDIA’s 2026 story becomes a high-tempo platform transition: stabilize Blackwell Ultra at scale, then pivot attention toward Rubin as the next stack-wide upgrade cycle.

From a competitive standpoint, the big strategic takeaway is that yield improvements are not a footnote. They are the difference between a paper launch and a rack-level reality. If GB300 is now in a healthier production state, that strengthens NVIDIA’s ability to meet hyperscaler demand while keeping lead times and deployment schedules on track.

What do you think matters more for AI infrastructure in 2026: faster node and architecture gains, or execution wins like higher yields and smoother rack-scale manufacturing?