NVIDIA DGX Spark Gains New OTA Optimizations: Up To 2.5x Faster LLM Performance, 8x AI Video Generation

NVIDIA’s DGX Spark continues to evolve beyond its launch positioning as a compact AI mini supercomputer, with NVIDIA now highlighting real-world gains delivered through software enablement and over-the-air (OTA) updates. Since the device launched on October 15, DGX Spark has received multiple rounds of optimization, including a recent OTA update focused on performance and stability across popular AI workflows. This reinforces NVIDIA’s strategy of treating the box as a living platform rather than a fixed-spec appliance.

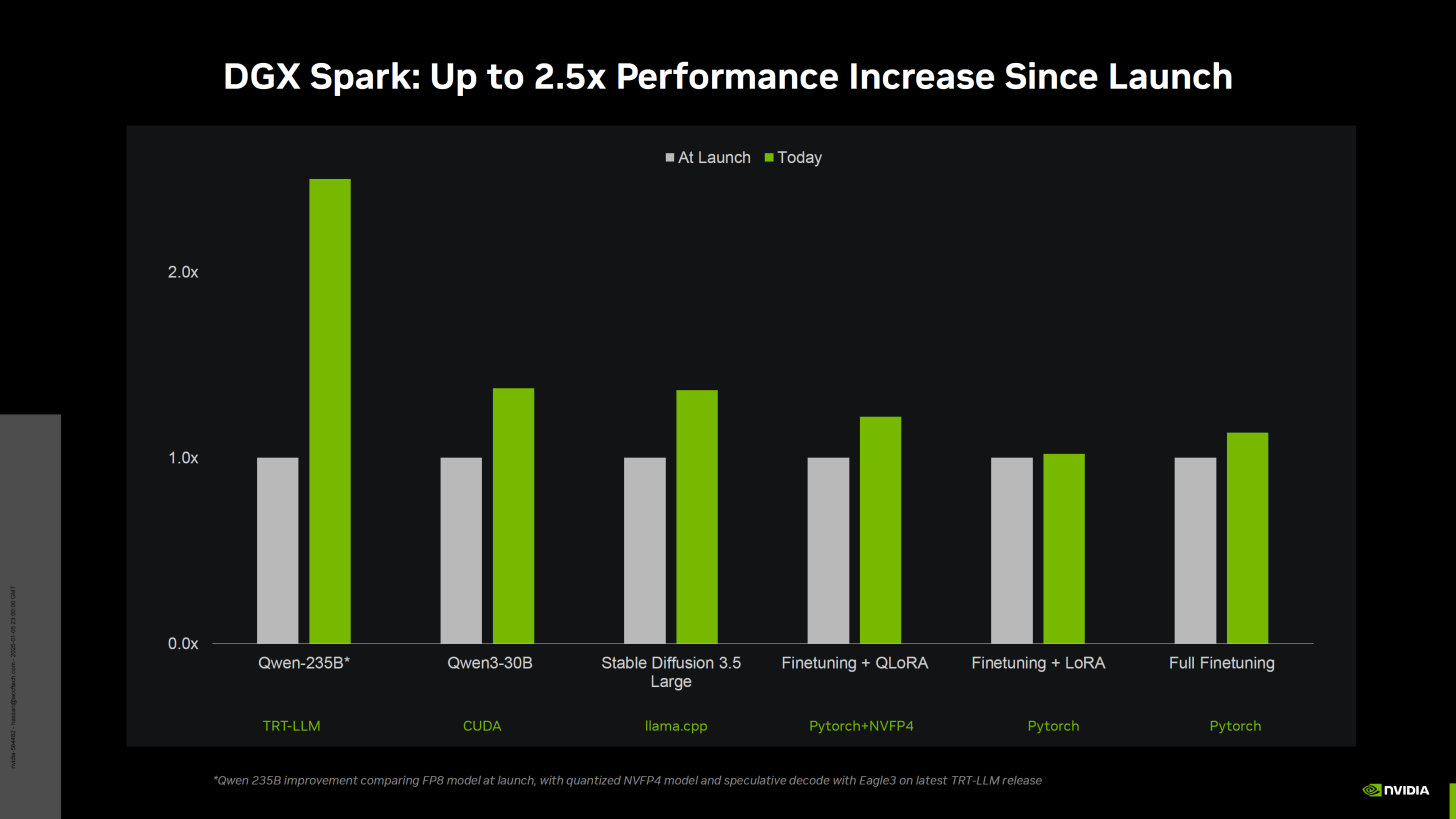

The most headline-grabbing performance claim centers on NVFP4 support and its impact on large model inference. NVIDIA states that DGX Spark can deliver up to a 2.5x performance boost on the Qwen 235B model when two DGX Spark systems are paired. NVIDIA also calls out CUDA-side optimizations delivering up to a 2x improvement in Omniverse Isaac Sim. For more mainstream model scenarios, NVIDIA says Qwen3 30B and Stable Diffusion 3.5 see an uplift of over 30%, and that PyTorch updates contribute additional gains. NVIDIA frames all of this as performance growth since launch, suggesting that a meaningful part of the DGX Spark value proposition is the compounding effect of CUDA, framework, and precision-format optimizations over time.
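To illustrate why a 4-bit format and a two-system pairing go together for a model of this size, here is a back-of-the-envelope sketch of weight memory at different precisions. It is not NVIDIA's methodology. The bits-per-weight figures are assumptions (NVFP4 is taken as 4-bit values plus one 8-bit scale per 16-value block, roughly 4.5 bits per weight), the parameter count is read off the model name, and the function and variable names are hypothetical.

```python
# Rough weight-memory estimate; every figure here is an illustrative assumption.

GB = 1e9  # decimal gigabytes

def weight_footprint_gb(params: float, bits_per_weight: float) -> float:
    """Approximate memory needed for model weights alone, in GB."""
    return params * bits_per_weight / 8 / GB

# Assumed effective bits per weight. NVFP4 is modeled as 4-bit values plus
# one 8-bit scale per 16-value block: 4 + 8/16 = 4.5 bits.
FORMATS = {"FP16": 16.0, "FP8": 8.0, "NVFP4": 4.5}

PARAMS = 235e9          # parameter count taken from the model name
UNIT_MEMORY_GB = 128.0  # unified memory per DGX Spark, as cited in the article

def report(params: float = PARAMS, systems=(1, 2)):
    """Return (format, weight GB, {system count: fits?}) for each format."""
    rows = []
    for name, bits in FORMATS.items():
        need = weight_footprint_gb(params, bits)
        fits = {n: need < n * UNIT_MEMORY_GB for n in systems}
        rows.append((name, round(need, 1), fits))
    return rows

if __name__ == "__main__":
    for name, need, fits in report():
        print(f"{name:6s} ~{need:6.1f} GB  one unit: {fits[1]}  two units: {fits[2]}")
```

Under these assumptions the 4-bit weights alone slightly exceed a single unit's 128GB but fit across two. The estimate ignores the KV cache, activations, the operating system, and any layers kept at higher precision, and it says nothing about throughput, so it should be read as a sizing intuition only.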

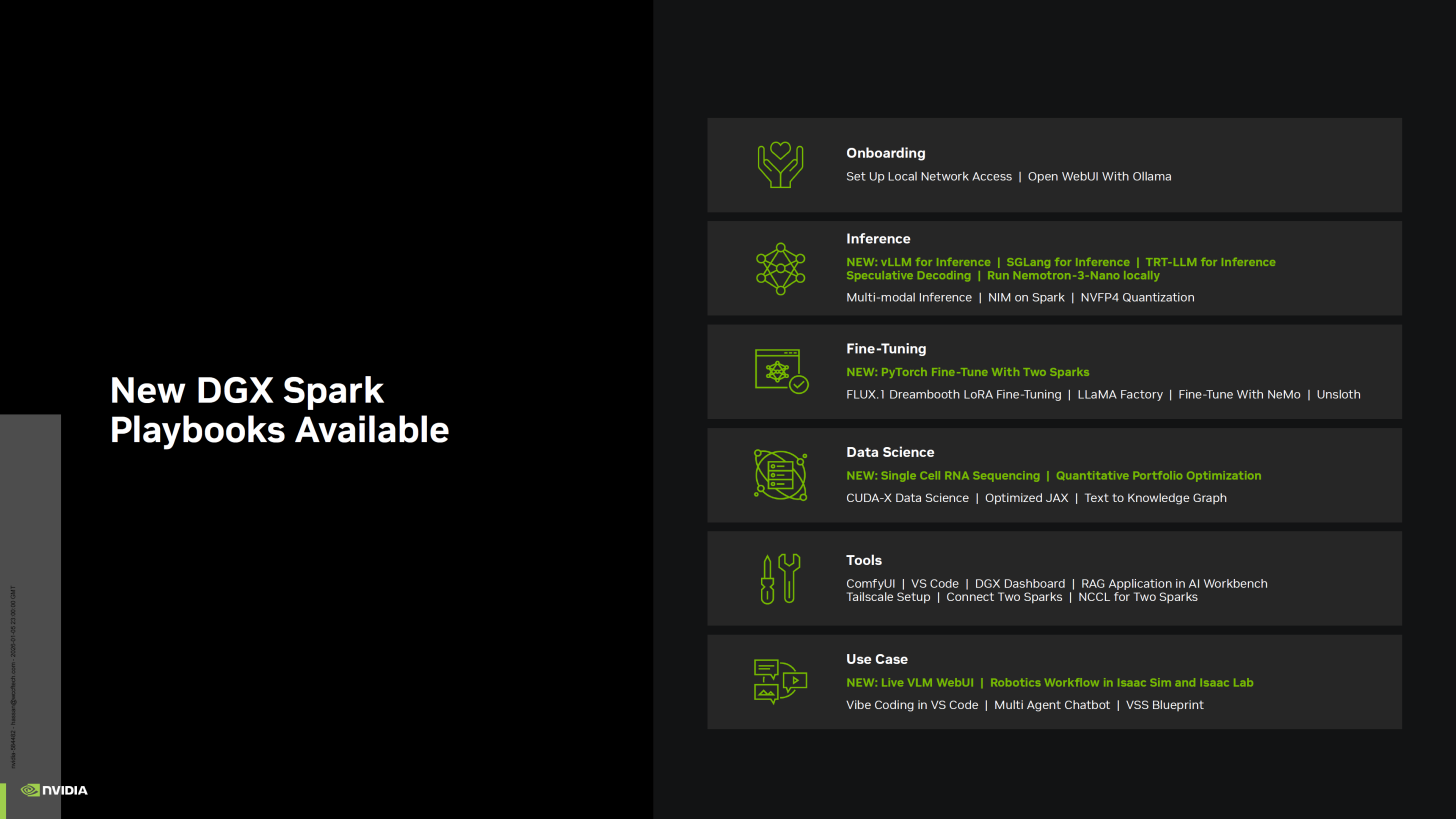

NVIDIA is also pushing a workflow-first story through DGX Spark Playbooks, which aim to reduce setup friction and make common tasks more repeatable for developers and creators. NVIDIA says it is adding 7 new playbooks and 4 major updates, covering inference frameworks and use cases such as vLLM for inference, SGLang for inference, TRT-LLM speculative decoding, running Nemotron 3 Nano locally, single-cell RNA sequencing, quantitative portfolio optimization, a live VLM WebUI, and robotics workflows in Isaac Sim and Isaac Lab. The intent is clear: DGX Spark is being marketed as both a local execution engine and a pre-validated reference environment, so teams can move from idea to working pipeline faster without spending days on dependency hunting and configuration tuning.

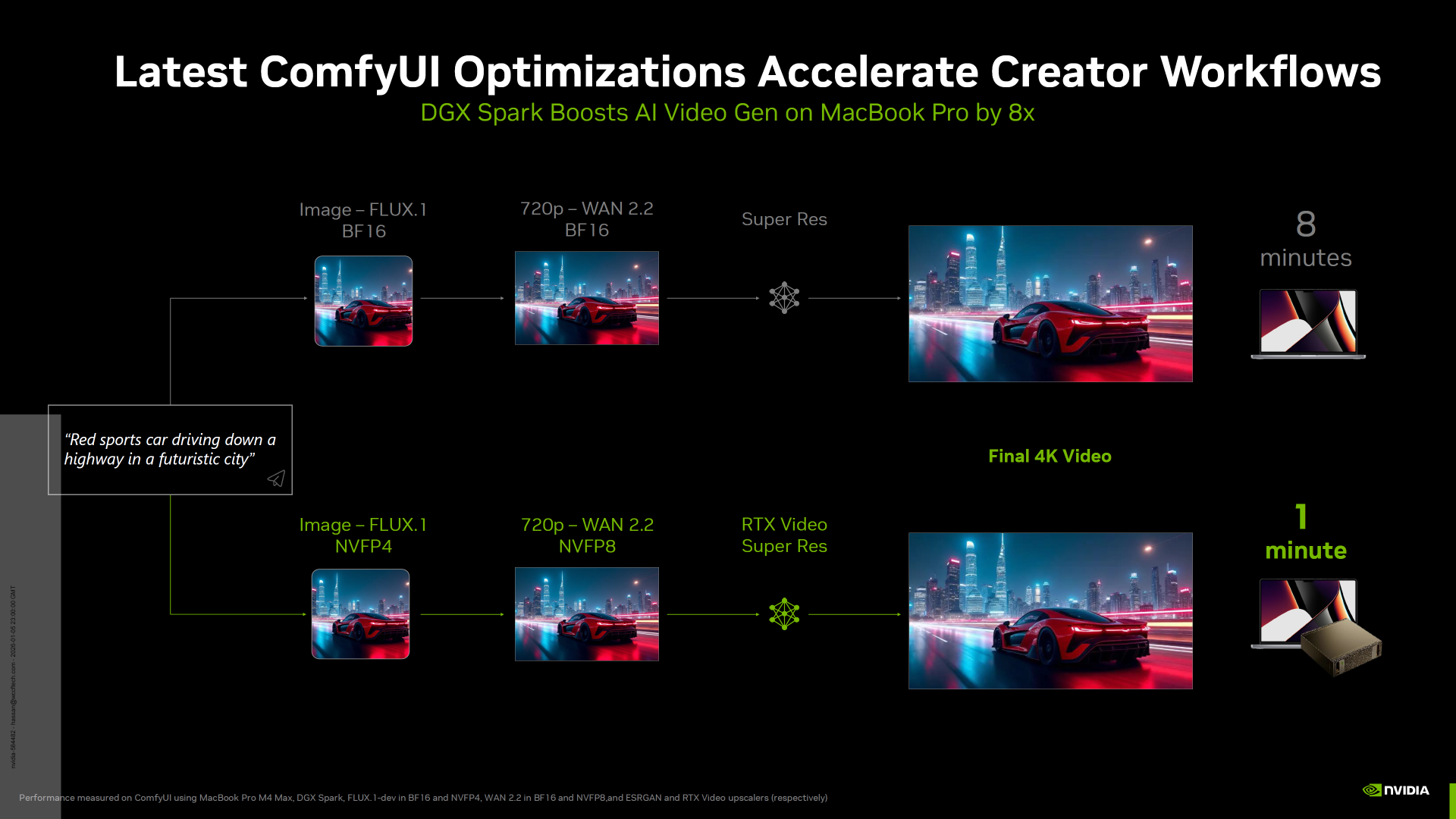

For creators, NVIDIA is leaning into the idea of DGX Spark as an offload accelerator that pairs with an existing laptop or desktop rather than replacing it. One showcased example pairs DGX Spark with a MacBook Pro and claims an 8x speedup in AI video generation by leveraging FP4 and FP8 execution plus RTX Video Super Resolution. In NVIDIA’s demonstration, DGX Spark generates a 4K video in about 1 minute, compared to about 8 minutes when running the same workflow on the MacBook Pro alone. This is a compelling narrative for creators who want to keep a familiar editing machine but add a compact AI co-processor for heavy generative steps.

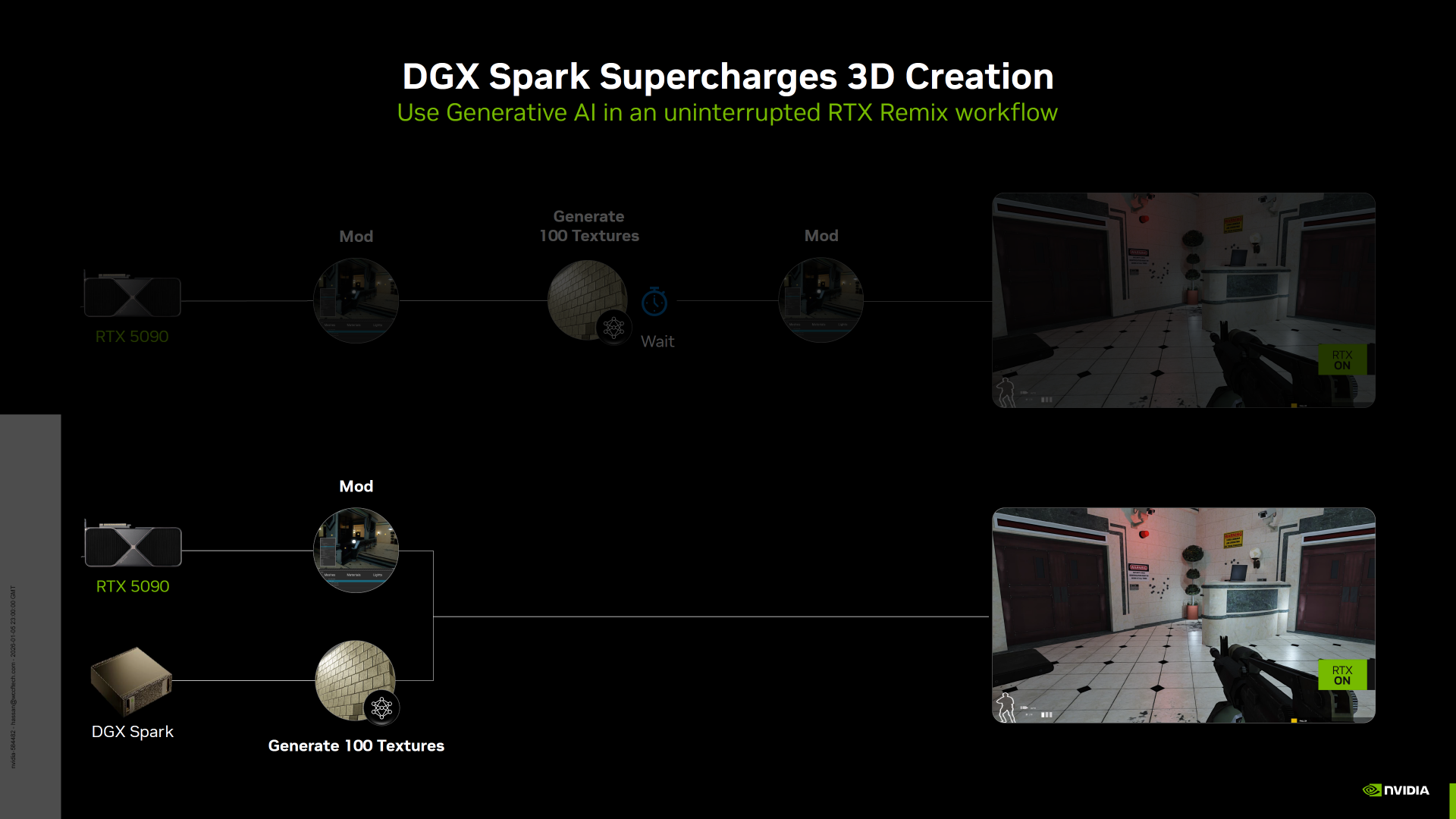

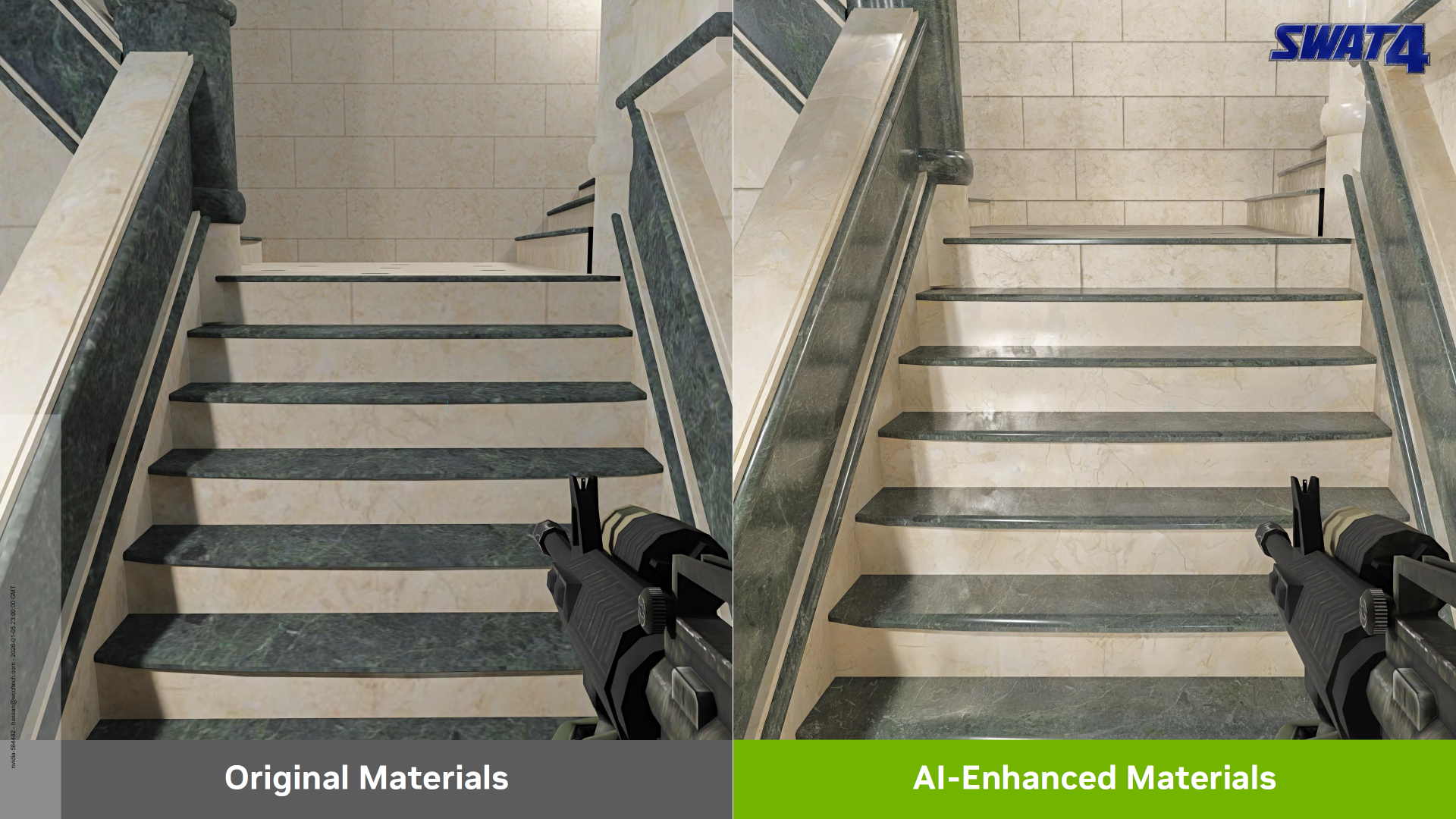

NVIDIA also highlights DGX Spark in 3D creation pipelines such as RTX Remix, positioning it as a companion to a powerful RTX desktop GPU. The concept is to offload compute- and memory-heavy tasks like texture generation to DGX Spark while the primary RTX 5090-class GPU remains focused on the core rendering and interactive creation workload. This is a practical split because it can improve iteration speed without forcing the creator to stop working while a model job runs. NVIDIA emphasizes that DGX Spark systems with 128GB of unified memory can take on larger tasks that would otherwise contend for VRAM and system memory on the primary machine.

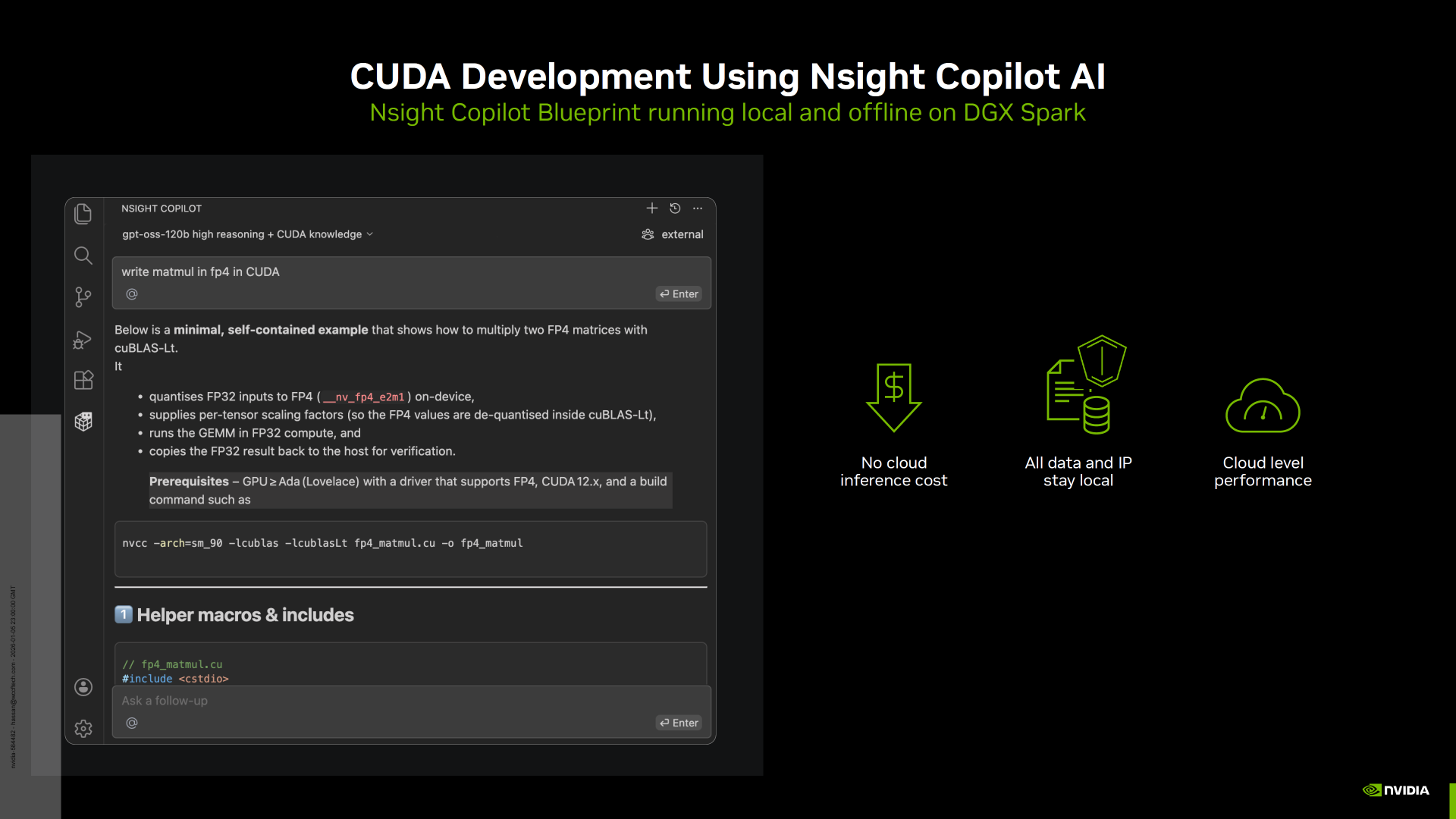

On the developer side, NVIDIA is spotlighting offline CUDA development using Nsight Copilot AI. NVIDIA frames this as a differentiator because running an AI-assisted coding agent locally can remove cloud inference costs and keep data and IP fully local. NVIDIA also cites DGX Spark’s 128GB of unified memory and 1 PFLOP-class compute as the enabler for this local workflow, effectively positioning the device as a private mini AI cloud on your desk.

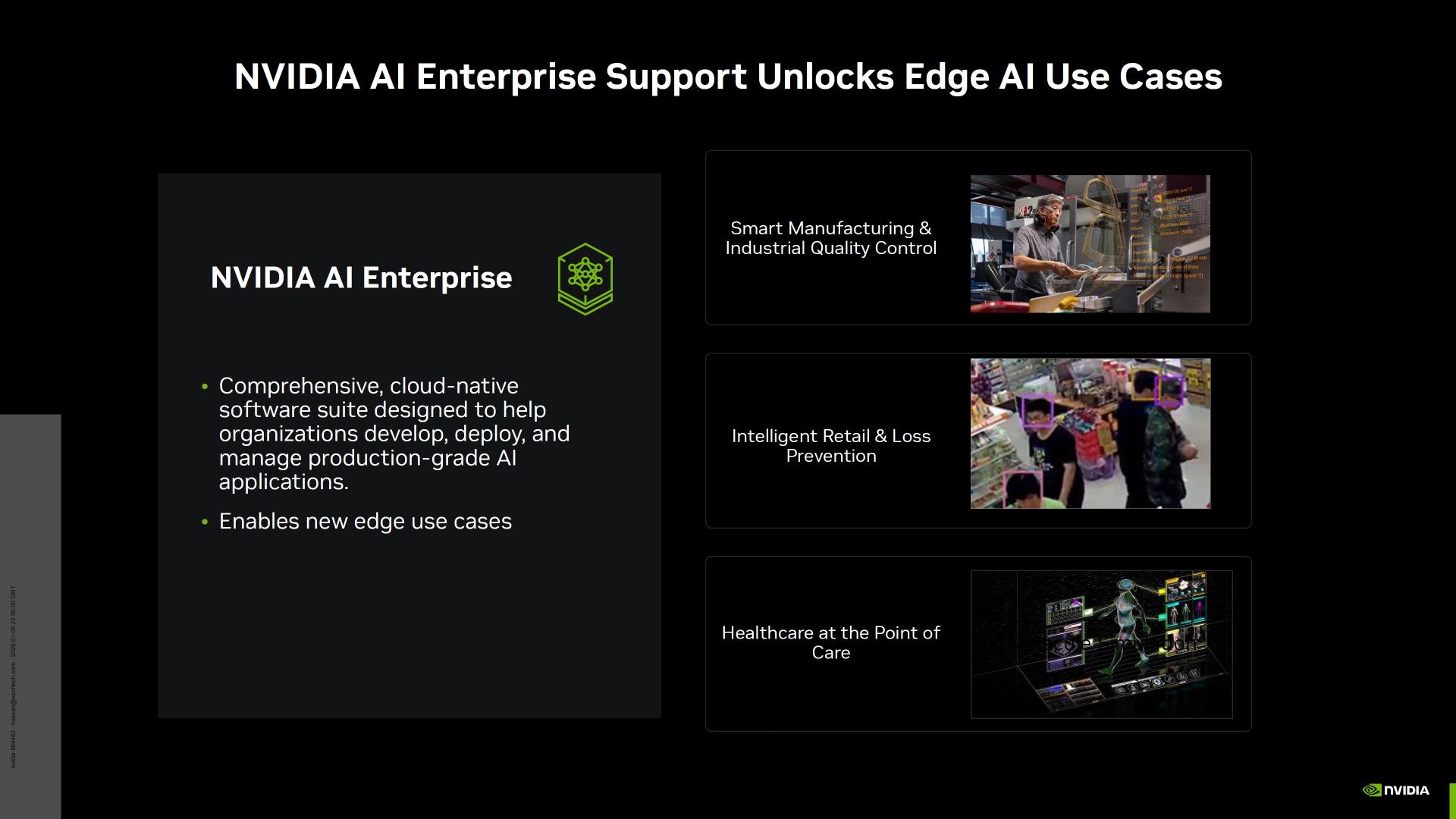

NVIDIA further reinforces DGX Spark’s ecosystem momentum through partnerships and enterprise positioning, highlighting integrations and support paths for edge AI use cases across manufacturing, retail, and healthcare, plus broader tooling and model ecosystem alignment. The message is that DGX Spark is not just aimed at hobbyists. It is meant to fit into professional and enterprise workflows where repeatability, support, and platform stability matter.

Taken together, NVIDIA’s updates show the DGX Spark strategy in full motion. Hardware is the foundation, but software and workflow acceleration are the growth engine. The platform is being optimized for more efficient low-precision inference with NVFP4, broader framework coverage, and repeatable playbooks that shorten developers' time to value. For creators and developers who want local execution, strong memory headroom, and a stack that improves over time, DGX Spark is increasingly being positioned as one of the most practical deskside AI machines on the market.

Engagement

Would you use a DGX Spark-style AI mini PC as an offload box paired with your main laptop or desktop, or would you rather put the budget into a single, bigger workstation that does everything in one machine?