NVIDIA Deepens CoreWeave Partnership With 2 Billion Dollar Investment, Promises Early Vera CPU Access as Jensen Huang Signals Bigger CPU Ambitions

NVIDIA is tightening its grip on the neocloud playbook with a new expansion of its relationship with CoreWeave, positioning the AI infrastructure specialist as an early launch partner for NVIDIA’s upcoming Vera CPUs. The move is notable not only because it reinforces CoreWeave’s role as one of NVIDIA’s closest ecosystem allies, but also because it signals that NVIDIA is actively preparing to compete more aggressively in the server CPU lane, at a moment when CPUs are becoming a real throughput limiter for modern AI stacks.

In NVIDIA’s official announcement, the company says it will commit an additional 2 billion dollars to CoreWeave by purchasing Class A common stock at 87.20 dollars per share. NVIDIA frames the investment as part of strengthening a long-standing collaboration to accelerate the buildout of AI factories, with CoreWeave targeting 5 gigawatts of AI factory capacity by 2030.
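For a sense of scale, the two figures above imply a rough share count. The sketch below is back-of-the-envelope arithmetic only: it assumes the full 2 billion dollars is spent at exactly the quoted price with no fees or adjustments, and the variable names are illustrative rather than taken from any filing.

```python
# Rough estimate of how many shares the quoted investment would buy.
# Assumption: the entire amount is spent at exactly the quoted price.
INVESTMENT_USD = 2_000_000_000   # 2 billion dollars, per the announcement
PRICE_PER_SHARE_USD = 87.20      # quoted Class A purchase price

implied_shares = INVESTMENT_USD / PRICE_PER_SHARE_USD

print(f"Implied shares: about {implied_shares / 1e6:.1f} million")
```

That works out to a little under 23 million Class A shares.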

The more strategically interesting layer comes from Jensen Huang’s public comments about what CoreWeave gets out of the deal and what NVIDIA intends to productize next. In remarks shared via Bloomberg’s Ed Ludlow, Huang said CoreWeave will be the first to stand up Vera CPUs, and emphasized that Vera will be offered as a standalone CPU infrastructure option. The standalone phrasing matters because it implies NVIDIA is not limiting Vera to bundled rack-scale pairings, but is instead aiming for broader adoption as a CPU platform choice inside AI data centers, including environments that may not be ready to commit to a full end-to-end NVIDIA rack solution.

From a market execution standpoint, this reads like NVIDIA acknowledging two realities at once. First, as agentic AI workloads scale, the CPU side of the host node is increasingly a bottleneck, especially when the goal is to maximize GPU utilization and keep data movement and orchestration overhead from eating into total performance per dollar. Second, offering Vera as a standalone CPU layer creates a stepping-stone adoption path: enterprises can standardize on NVIDIA CPUs for certain workloads before they standardize the entire rack around NVIDIA’s broader platform.

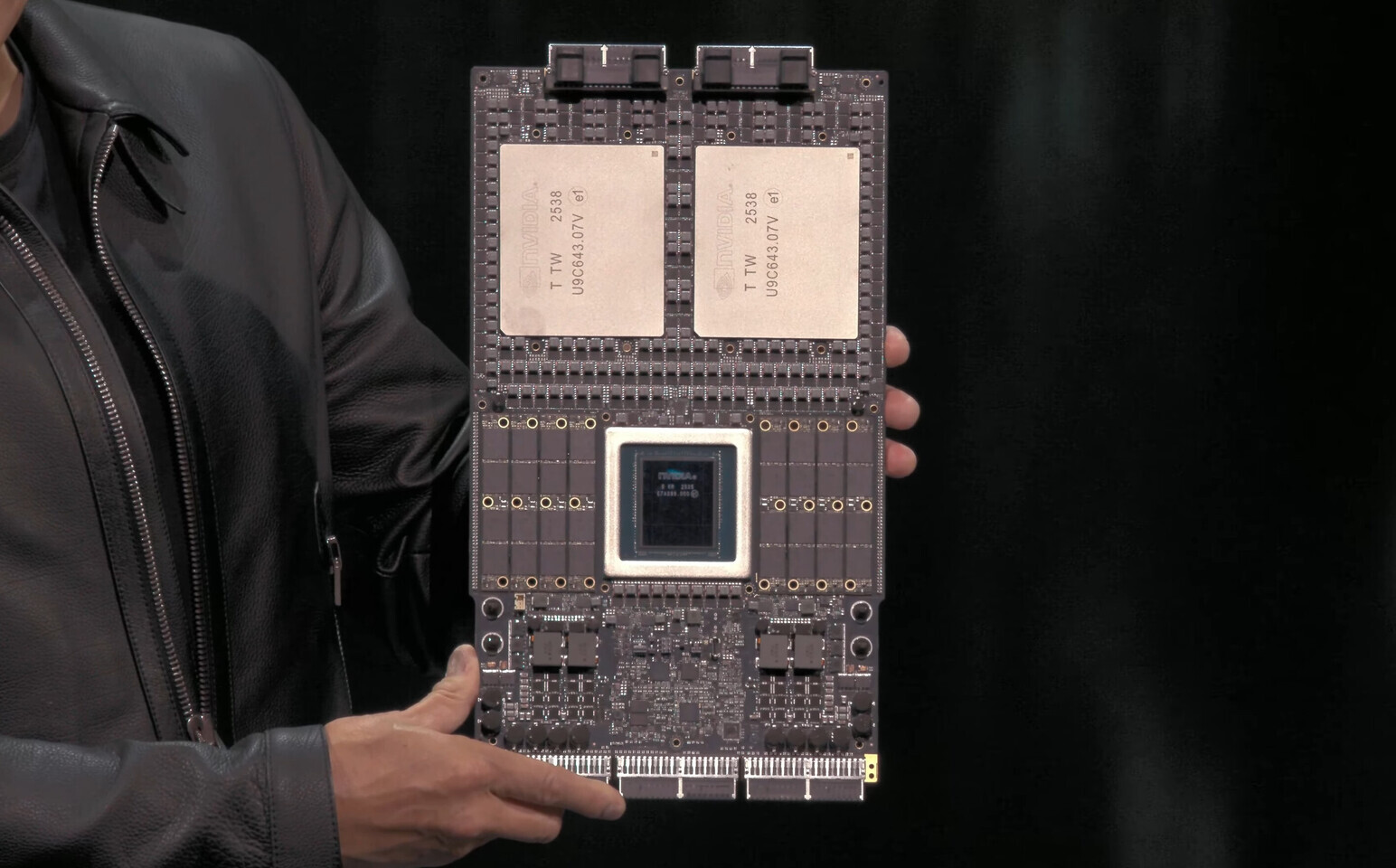

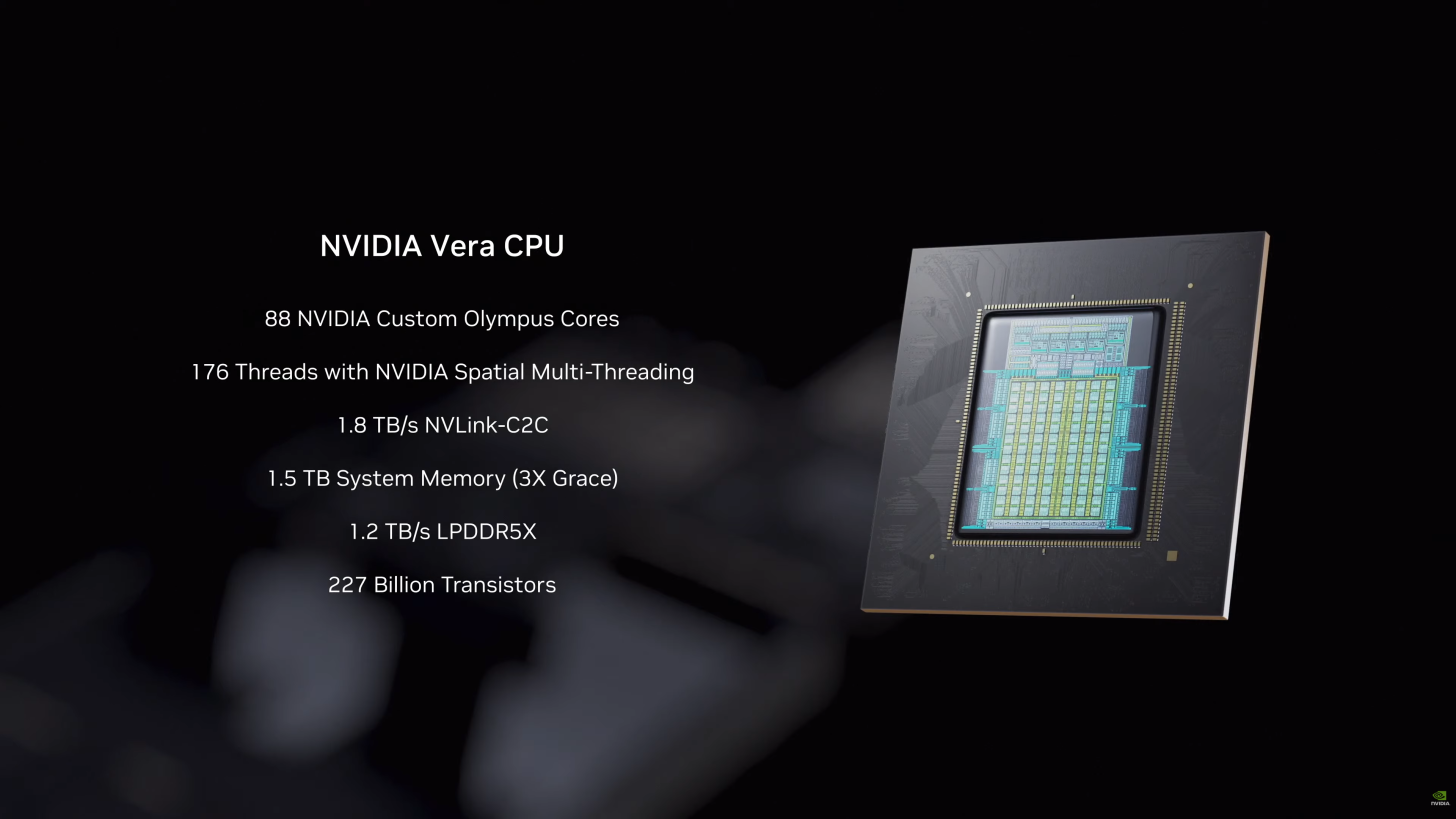

On paper, NVIDIA is positioning Vera as a substantial generational leap. The specifications referenced in the reporting paint Vera as an Arm-based design built around a next-generation custom architecture codenamed Olympus, featuring 88 cores and 176 threads enabled through NVIDIA Spatial Multithreading. The platform is also described as offering a 1.8 TB per second NVLink-C2C coherent memory interconnect, up to 1.5 TB of system memory, 1.2 TB per second of memory bandwidth via SOCAMM LPDDR5X, and rack-scale confidential compute. Taken together, this is NVIDIA aiming squarely at the AI host node problem, not just with more CPU cores, but with interconnect and memory architecture decisions designed to keep accelerators fed and efficient.
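To put those headline numbers in per-core terms, the quoted figures can be divided out. This is a rough sketch that uses only the specifications listed above; it assumes decimal units (1 TB = 1000 GB) and an even split across cores, which real workloads will not see, and the variable names are illustrative.

```python
# Per-core ratios derived from the Vera figures quoted in the article.
# Assumptions: decimal units (1 TB = 1000 GB) and an even split across cores.
CORES = 88
THREADS = 176
MEM_BW_TB_S = 1.2    # LPDDR5X memory bandwidth, TB/s
C2C_BW_TB_S = 1.8    # NVLink-C2C interconnect bandwidth, TB/s
MAX_MEM_TB = 1.5     # maximum system memory, TB

threads_per_core = THREADS // CORES
mem_bw_per_core_gb_s = MEM_BW_TB_S * 1000 / CORES
mem_per_core_gb = MAX_MEM_TB * 1000 / CORES
c2c_to_mem_bw_ratio = C2C_BW_TB_S / MEM_BW_TB_S

print(f"Threads per core:          {threads_per_core}")
print(f"Memory bandwidth per core: {mem_bw_per_core_gb_s:.1f} GB/s")
print(f"Memory capacity per core:  {mem_per_core_gb:.1f} GB")
print(f"C2C vs. memory bandwidth:  {c2c_to_mem_bw_ratio:.2f}x")
```

On these figures each core gets two threads, roughly 13.6 GB/s of memory bandwidth, and about 17 GB of memory at the maximum configuration, while the chip-to-chip link is quoted at 1.5 times the local memory bandwidth.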

Jensen Huang also teased that NVIDIA has not announced its CPU design wins yet, but that there will be many. If that claim materializes, it would indicate NVIDIA intends to move beyond being the default accelerator vendor and into being a more complete infrastructure anchor, where the CPU platform is part of the same strategic lock-in story that CUDA enabled for GPUs. The timing is also telling, because it arrives as the industry watches hyperscalers and neoclouds increasingly control the allocation, scheduling, and distribution of next-wave compute, effectively acting as the storefront for AI capacity.

The big question is whether this is primarily a CoreWeave acceleration move, or the opening chapter of NVIDIA pushing into broader CPU mindshare across the data center. The answer likely depends on how quickly Vera can scale from early partner deployments into repeatable design wins across major operators, and whether customers see real operational advantages in pairing NVIDIA CPUs with NVIDIA GPUs beyond pure performance benchmarks.

Do you see NVIDIA’s standalone Vera CPU strategy as a genuine attempt to dominate the server CPU market, or mainly as a platform lever to keep GPU-led AI factories running at peak efficiency?