NVIDIA Announces Nemotron 3 Family of Open AI Models With Nano, Super, and Ultra Variants

NVIDIA has officially unveiled the Nemotron 3 family of open artificial intelligence models, marking a major evolution in its open model strategy and a significant leap forward compared to the previous Nemotron 2 generation. According to the official NVIDIA press release, Nemotron 3 is designed to power transparent, efficient, and highly specialized agentic AI systems across a wide range of industries, from enterprise software and cybersecurity to manufacturing, media, and communications.

The Nemotron 3 lineup introduces a new hybrid latent mixture-of-experts (MoE) architecture that enables scalable multi-agent AI systems with significantly improved efficiency and accuracy. The family is offered in three sizes (Nano, Super, and Ultra), allowing developers and enterprises to right-size their AI deployments based on workload complexity, latency requirements, and cost constraints.
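For readers unfamiliar with mixture-of-experts models, the core idea is that a small router picks a few "expert" sub-networks for each token, so only part of the model runs on any given input. The Python sketch below shows generic top-k routing to illustrate that idea. It is not NVIDIA's latent MoE design, whose internals the announcement does not describe, and the eight toy experts and router scores are purely hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[float], float]  # a toy expert: maps a scalar input to an output


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_moe(token: float,
              gate_logits: Sequence[float],
              experts: Sequence[Expert],
              k: int) -> Tuple[float, List[int]]:
    """Send one token to the k highest-scoring experts and blend their outputs."""
    # Indices of the k largest router scores.
    chosen = sorted(range(len(gate_logits)),
                    key=lambda i: gate_logits[i], reverse=True)[:k]
    # Renormalize the router weights over the chosen experts only.
    weights = softmax([gate_logits[i] for i in chosen])
    # Only the chosen experts run; the others are skipped for this token.
    output = sum(w * experts[i](token) for w, i in zip(weights, chosen))
    return output, chosen


if __name__ == "__main__":
    # Eight hypothetical experts: expert s simply multiplies its input by s.
    experts = [lambda x, s=s: s * x for s in range(8)]
    # Hypothetical router scores for one token.
    logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]
    out, chosen = top_k_moe(1.0, logits, experts, k=2)
    print(f"experts used: {chosen}, output: {out:.3f}")
```

With k=2 of 8 experts, only a quarter of the expert weights are touched for this token. That selective activation is the general mechanism behind the "active parameter" counts quoted for MoE models.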

NVIDIA positions Nemotron 3 as a foundational pillar of its broader sovereign AI strategy. Organizations across Europe, South Korea, and other regions are adopting open and transparent models like Nemotron to build AI systems aligned with local data policies, regulatory frameworks, and cultural values. This approach allows governments and enterprises to retain control over their AI stacks without sacrificing performance or scalability.

Early adoption of Nemotron models is already underway. Companies including Accenture, Cadence, CrowdStrike, Cursor, Deloitte, EY, Oracle Cloud Infrastructure, Palantir, Perplexity, ServiceNow, Siemens, and Zoom are integrating Nemotron into AI workflows spanning cybersecurity, software development, enterprise automation, and industrial operations. In parallel, startups backed by Mayfield are exploring Nemotron 3 to build AI teammates that enhance human-AI collaboration, from early prototyping through enterprise-scale deployment.

The Nemotron 3 family consists of three model tiers. Nemotron 3 Nano is a 30-billion-parameter model with 3 billion active parameters, optimized for efficient, targeted tasks. Nemotron 3 Super scales to approximately 100 billion parameters with 10 billion active and is designed for complex multi-agent reasoning workloads. Nemotron 3 Ultra is the flagship reasoning engine, with roughly 500 billion parameters, 50 billion of them active, targeting deep research, planning, and large-scale agent orchestration.
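Those figures imply that each tier activates roughly 10 percent of its weights per token. The back-of-the-envelope sketch below uses the approximate parameter counts above plus a common rule of thumb, assumed here rather than taken from NVIDIA, that a forward pass costs about two floating-point operations per active parameter per token. It ignores attention and routing overhead.

```python
# Approximate parameter counts from the announcement, in billions: (total, active).
TIERS = {
    "Nano": (30, 3),
    "Super": (100, 10),
    "Ultra": (500, 50),
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of the model's weights used for each token."""
    return active_b / total_b


def forward_gflops_per_token(active_b: float) -> float:
    """Rule-of-thumb forward-pass cost: ~2 FLOPs per active parameter per token."""
    return 2 * active_b  # billions of params x 2 FLOPs = GFLOPs


for name, (total, active) in TIERS.items():
    print(f"{name}: {active}B of {total}B active "
          f"({active_fraction(total, active):.0%}), "
          f"~{forward_gflops_per_token(active):.0f} GFLOPs/token "
          f"vs ~{forward_gflops_per_token(total):.0f} if fully dense")
```

Under this rough model, per-token compute scales with the active count rather than the total, which is why a 30-billion-parameter MoE can run at a cost closer to that of a 3-billion-parameter dense model.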

Available today, Nemotron 3 Nano is NVIDIA’s most compute-cost-efficient open model to date. It is optimized for workloads such as software debugging, content summarization, AI assistants, and information retrieval, delivering strong reasoning performance at low inference cost. The hybrid MoE design delivers up to four times higher token throughput than Nemotron 2 Nano while generating up to sixty percent fewer reasoning tokens, which translates directly into lower inference cost and faster response times.
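The two efficiency claims compound if both hold at once: fewer tokens to generate, each generated faster. The sketch below illustrates that arithmetic with a hypothetical baseline of 2,000 reasoning tokens at 100 tokens per second. The baseline numbers are invented for illustration, and because both NVIDIA figures are "up to" values, real workloads will likely see smaller combined gains.

```python
def generation_time_s(tokens: float, tokens_per_s: float) -> float:
    """Wall-clock time to generate a given number of tokens at a steady rate."""
    return tokens / tokens_per_s


# Hypothetical baseline workload for Nemotron 2 Nano (illustrative numbers only).
baseline_tokens = 2_000
baseline_tps = 100.0

# Best-case multipliers quoted in the announcement ("up to" figures).
THROUGHPUT_GAIN = 4.0   # up to four times higher token throughput
TOKEN_REDUCTION = 0.60  # up to sixty percent fewer reasoning tokens

new_tokens = baseline_tokens * (1 - TOKEN_REDUCTION)
new_tps = baseline_tps * THROUGHPUT_GAIN

before = generation_time_s(baseline_tokens, baseline_tps)
after = generation_time_s(new_tokens, new_tps)
print(f"{before:.1f}s -> {after:.1f}s ({after / before:.0%} of baseline)")
```

If both best cases applied together, response time would fall to about a tenth of the baseline (0.4 of the tokens at four times the speed). Since per-token pricing is common, the token reduction alone would also cut generation cost by the same sixty percent.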

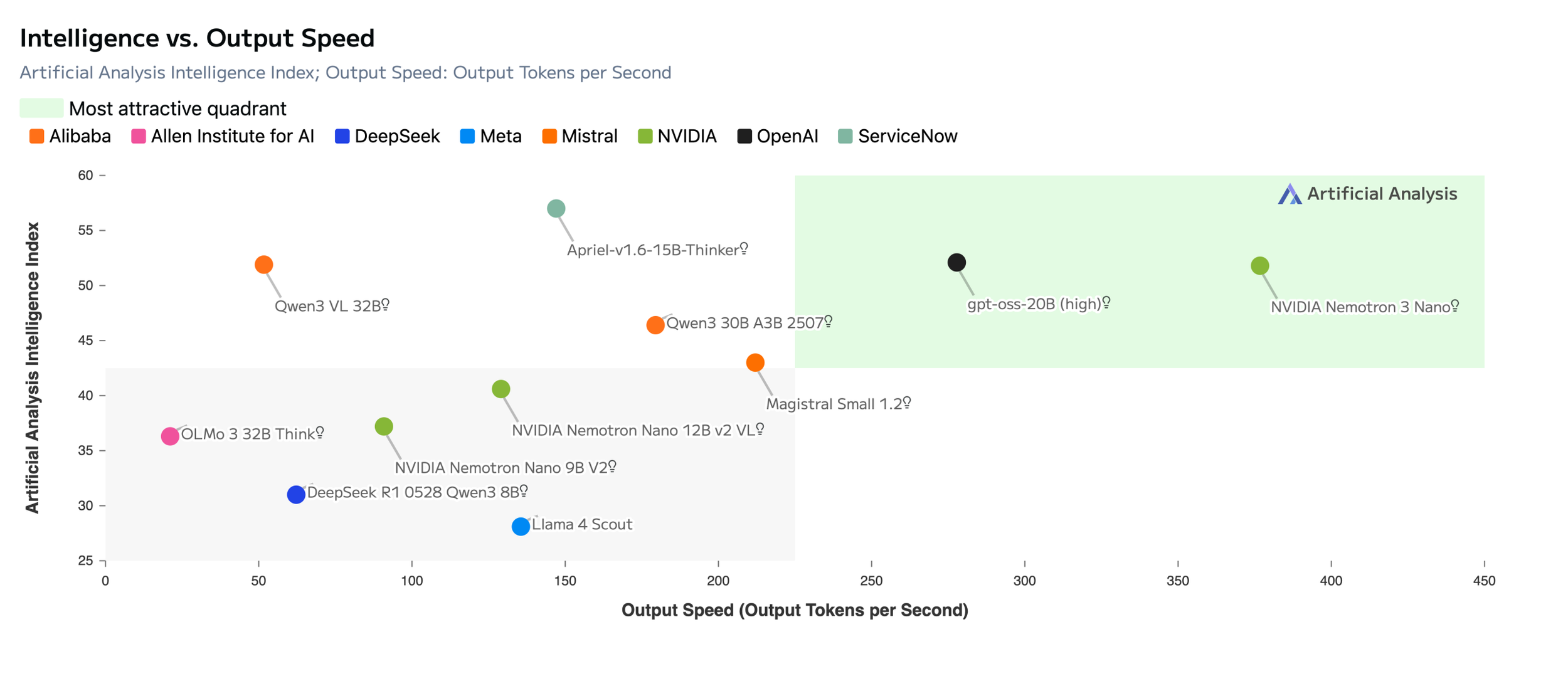

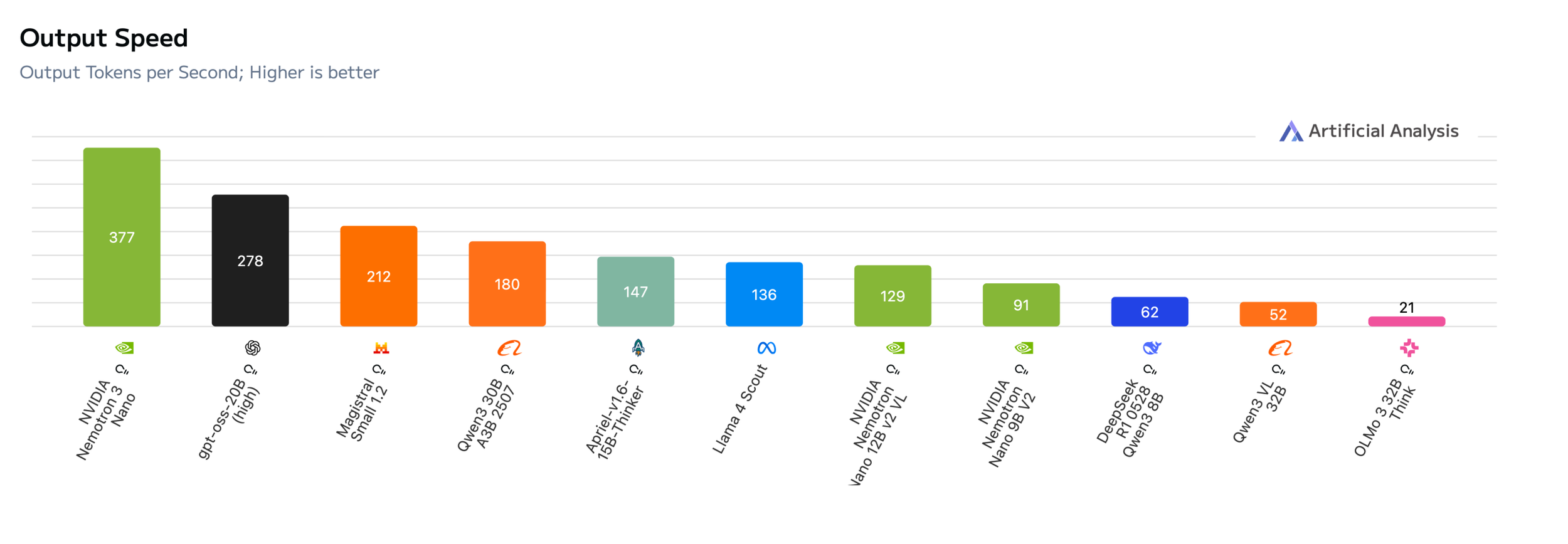

Nemotron 3 Nano also introduces a one-million-token context window, allowing it to retain far more information across long, multistep tasks. This extended memory improves accuracy, contextual understanding, and consistency in complex workflows. Independent benchmarking from Artificial Analysis ranked Nemotron 3 Nano as the most open and efficient model in its size class, with leading accuracy and output speed. Performance measurements show Nemotron 3 Nano reaching up to 377 tokens per second, placing it among the fastest open models currently available.

Nemotron 3 Super and Nemotron 3 Ultra extend these capabilities to demanding enterprise use cases. Both models are trained with NVIDIA’s ultra-efficient 4-bit NVFP4 format on the NVIDIA Blackwell architecture. This approach significantly reduces memory requirements and accelerates training while maintaining accuracy comparable to higher-precision formats. As a result, organizations can train and deploy substantially larger reasoning models on existing infrastructure without prohibitive cost increases.
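To see why a 4-bit format saves so much memory, the sketch below mimics NVFP4's block scaling as NVIDIA has publicly described it: FP4 (E2M1) values that share one scale factor per 16-element block. It is a simplification. Real NVFP4 stores each block scale in FP8 (E4M3), applies an additional per-tensor FP32 scale, and uses hardware rounding, whereas this version keeps scales as Python floats and breaks ties toward the smaller magnitude. The memory helper counts weights only, ignoring activations, optimizer state, and KV cache.

```python
from typing import List, Tuple

# Non-negative magnitudes representable by an FP4 E2M1 element.
E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
BLOCK_SIZE = 16  # NVFP4 shares one scale factor across 16 elements

# 4 bits per element plus one 8-bit scale per 16 elements = 4.5 bits on average.
NVFP4_BITS = 4 + 8 / BLOCK_SIZE


def quantize_block(block: List[float]) -> Tuple[float, List[float]]:
    """Scale a block so its largest magnitude maps to 6.0, then snap to the E2M1 grid.

    Simplification: the scale stays a Python float instead of an FP8 (E4M3) value.
    """
    amax = max(abs(x) for x in block)
    scale = amax / E2M1_GRID[-1] if amax > 0 else 1.0
    codes = []
    for x in block:
        # Nearest grid magnitude; min() returns the first match, so ties go lower.
        mag = min(E2M1_GRID, key=lambda g: abs(abs(x) / scale - g))
        codes.append(mag if x >= 0 else -mag)
    return scale, codes


def dequantize_block(scale: float, codes: List[float]) -> List[float]:
    """Recover approximate values by multiplying each code by the block scale."""
    return [scale * c for c in codes]


def weight_memory_gb(params: float, bits_per_param: float) -> float:
    """Weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    # Sixteen hypothetical weights forming one block.
    weights = [0.12, -0.03, 0.5, -0.9, 0.27, 0.0, -0.44, 0.61,
               0.08, -0.15, 0.33, -0.72, 0.05, 0.9, -0.2, 0.4]
    scale, codes = quantize_block(weights)
    restored = dequantize_block(scale, codes)
    print("max abs error:", max(abs(a - b) for a, b in zip(weights, restored)))
    print("BF16 GB:", weight_memory_gb(500e9, 16))
    print("NVFP4 GB:", weight_memory_gb(500e9, NVFP4_BITS))
```

For a model on the scale of Nemotron 3 Ultra (roughly 500 billion parameters), this puts weight storage at about 281 GB in the 4.5-bit layout versus 1,000 GB in 16-bit BF16, at the cost of the rounding error the first print line reports.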

The flexibility of the Nemotron 3 family allows developers to scale from dozens to hundreds of AI agents within a single workflow. This makes the platform well suited to advanced agentic systems that require long-horizon reasoning, coordination among multiple agents, and low-latency execution.

Nemotron 3 Nano is available today through multiple channels. It is hosted on Hugging Face and supported by inference providers including Baseten, DeepInfra, Fireworks, FriendliAI, OpenRouter, and Together AI. Nemotron is also integrated into enterprise AI platforms such as Couchbase, DataRobot, H2O.ai, JFrog, Lambda, and UiPath. For public cloud users, Nemotron 3 Nano will be available on AWS through Amazon Bedrock as a serverless offering, with support also coming to Google Cloud, CoreWeave, Nebius, Nscale, and Yotta.

For organizations requiring maximum privacy and control, Nemotron 3 Nano is offered as an NVIDIA NIM microservice, enabling secure, scalable deployment on NVIDIA-accelerated infrastructure. NVIDIA said Nemotron 3 Super and Nemotron 3 Ultra are expected to become available in the first half of 2026.

With Nemotron 3, NVIDIA is positioning itself at the center of the open and sovereign AI movement, offering models that combine openness, efficiency, and enterprise-grade performance. The shift toward right-sized agentic AI models reflects a broader industry trend in which raw parameter count matters less than efficiency, scalability, and long-horizon reasoning capability.

Do you see open models like Nemotron 3 becoming the foundation for enterprise AI over closed systems, or will proprietary models continue to dominate large scale deployments?