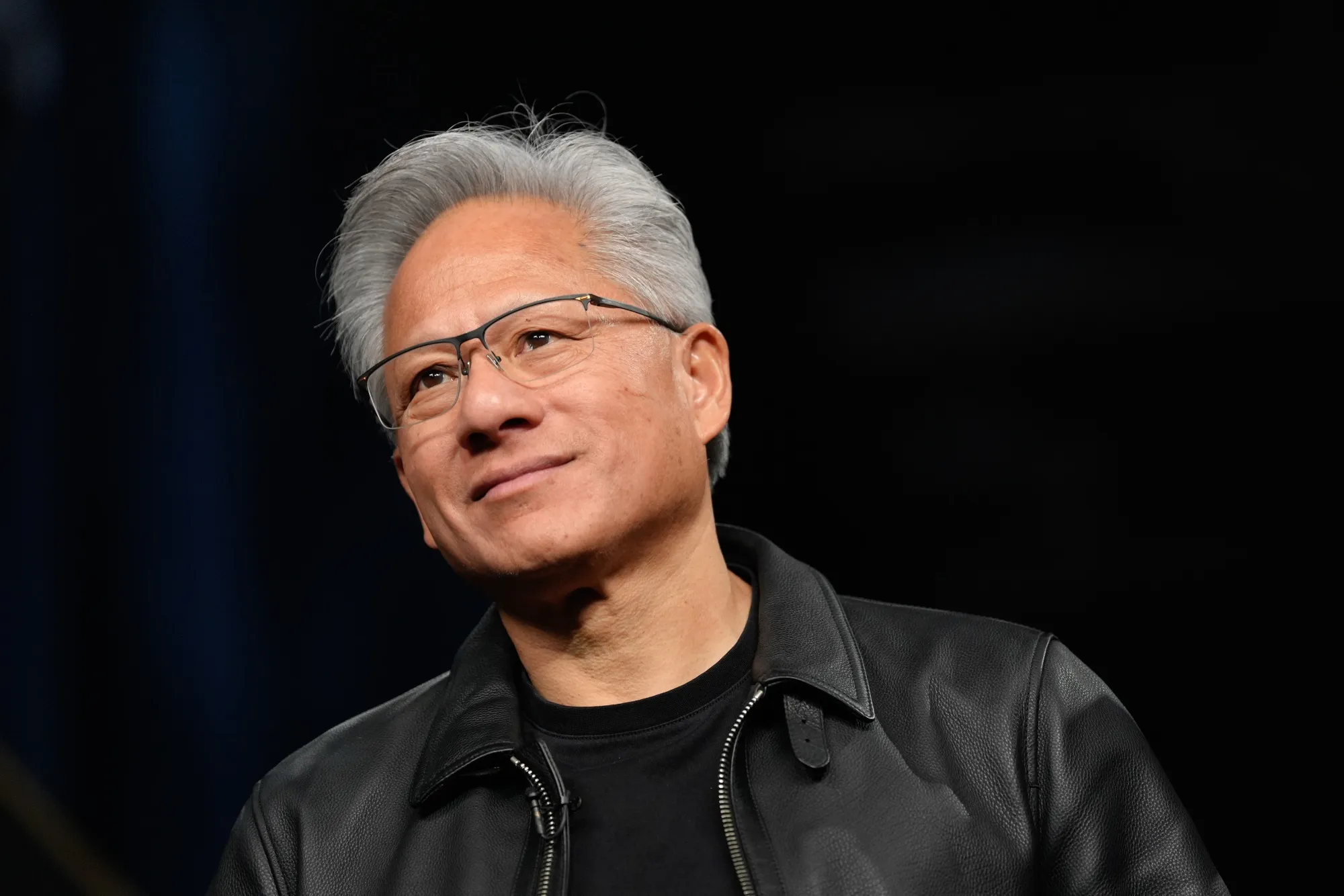

Jensen Huang Calls Big Tech AI CapEx Surge Sustainable, Rejects Overspending Narrative

NVIDIA CEO Jensen Huang is pushing back on the growing skepticism around Big Tech’s exploding AI capital expenditures, framing the latest wave of infrastructure spending as sustainable and structurally necessary rather than reckless overspending. In recent remarks, Huang argued that the industry is in a once-in-a-generation infrastructure buildout, driven by demand that is not speculative but anchored in real-world usage and expanding monetization across the AI stack. His comments, captured in this video on YouTube, land as investors and analysts debate whether the latest surge in AI-related spending is setting up the next productivity revolution or repeating a familiar boom-and-bust cycle.

Huang’s core claim is that AI has moved rapidly from novelty to utility, and that utility is now translating into revenue. He points to what he describes as an inflection point where AI is not simply generating interest, but generating profitable tokens and meaningful business outcomes. In his words, demand is sky-high for a fundamental reason, and that reason is the shift from AI as a curiosity to AI as an economic engine. He also notes that leading AI labs are already making significant money, and cites that as evidence that the spending is tied to a real monetization layer, not just experimentation.

From Huang’s perspective, the deeper story is not just about data centers and GPUs. It is about a structural shift in what software is and what software does. He describes a new era where software is not merely a tool, but something that uses tools. The reframing matters because it positions modern AI systems as active operators inside workflows rather than passive interfaces. In practical terms, it is the difference between software that waits for human input and software that can execute multi-step tasks, orchestrate systems, and deliver outcomes. That framing supports his argument that the industry is chasing what he calls the largest software opportunity in history.

The skepticism, however, is not going away. The concern in financial circles is not only the size of capital commitments, but the uncertainty of return, specifically whether today’s scale of spending will produce results that justify investor expectations. Critics are drawing analogies to prior tech cycles where infrastructure was built ahead of monetization, including the dark fiber parallels often referenced when discussing the dot-com era. In that view, AI spending could overshoot demand, creating excess capacity before mass adoption catches up.

Still, Huang’s stance is that the trajectory is different this time because the implementation layer is maturing quickly. In recent months, the market has seen rapid growth in agentic AI tooling and production use cases, where AI systems do more than chat and instead build, deploy, test, and operate. Supporters of the spending wave argue this resembles the early cloud era, where the winners were the companies that built capacity and platforms early, then captured the long tail of adoption once businesses fully committed.

The reality for 2026 is that the debate is now less about whether AI matters, and more about timing and diffusion. If AI continues spreading into mainstream workflows, consumer products, and enterprise operations at scale, then today’s CapEx surge may look like the foundation of a new computing cycle. If adoption stalls or monetization fails to expand beyond a small set of winners, then the overspending argument gains oxygen. Either way, Huang is drawing a hard line: the spending is sustainable, the demand is real, and the software opportunity is massive.

Do you think the AI CapEx surge is building the next cloud-level platform shift, or does it look more like a capacity bubble that will take years to monetize?