NVIDIA Says It Is Well Positioned For The DRAM Supercycle, But Gaming GPUs Could Still Get Squeezed By Memory Shortages

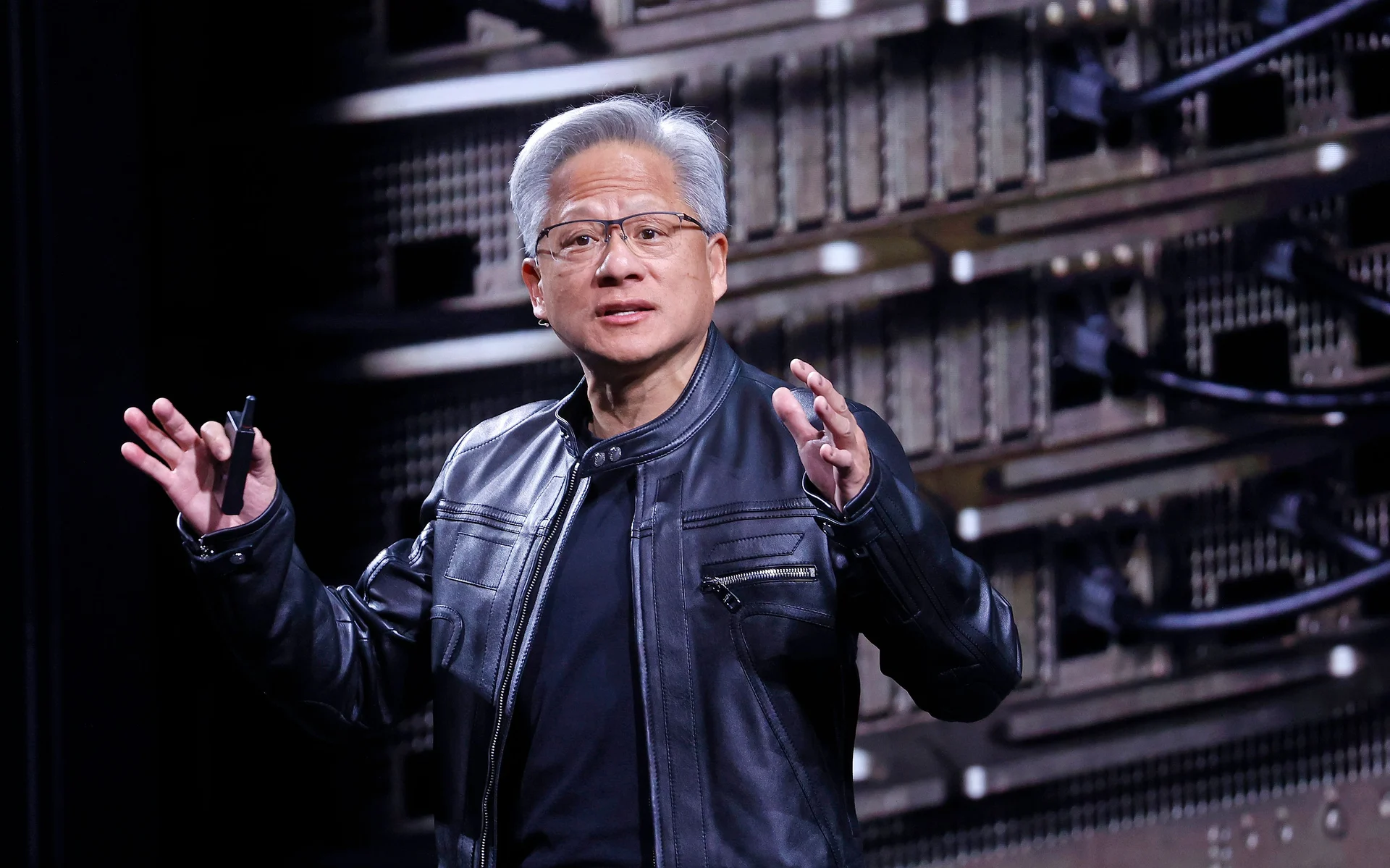

Memory shortages were a defining hardware storyline of 2025, and supply constraints are carrying into 2026 with even greater intensity as AI infrastructure absorbs a growing share of DRAM and advanced packaging capacity. During a CES financial analyst Q&A session with NVIDIA CEO Jensen Huang and CFO Colette Kress, an analyst asked how the DRAM supercycle is affecting NVIDIA, including pricing pressure, supply volumes, and available inventory. According to the Q&A transcript shared with the community by Jukan, NVIDIA's leadership framed the company as structurally advantaged because it scaled early, planned capacity ramps in advance, and invested directly with partners through prepayments to help expand supply.

NVIDIA's core argument is that long-standing supplier relationships and proactive capital commitments create a buffer when supply is tight. The company also highlighted an operational difference from most of the industry: it purchases DRAM directly at global scale and then turns that memory into highly complex CoWoS-class supercomputers. NVIDIA described this end-to-end supply chain integration as a "plumbing" advantage, because shipping AI platforms at volume requires more than securing chips. It also means coordinating the packaging, interconnect, and systems assembly behind them. In short, NVIDIA is signaling that it has built a supply chain playbook to reduce the risk of being bottlenecked when memory becomes scarce.

That posture aligns with the broader reality that NVIDIA has been in direct contact with memory suppliers for years as its AI footprint expanded. From an execution perspective, long-term agreements with memory manufacturers are essential when product ramps are measured in rack-scale deployments rather than bursts of consumer units. NVIDIA cannot afford delays when data center platforms are its revenue engine, and the company's comments at CES suggest it is prioritizing continuity of supply for AI systems even as DRAM pricing and allocation remain volatile.

The key tension is what happens on the consumer side. Even if AI remains protected by long-term planning and deep supplier coordination, gamers can still feel the pinch when advanced memory supply tightens. The logic is simple: if inventory and allocation decisions must be made during a shortage, the platforms that drive the largest and most strategic revenue streams are likely to be protected first. That makes consumer GPU refresh plans more sensitive to memory availability, memory configurations, and the timing of component allocation. In practical terms, this is how a market ends up with delayed launches, constrained availability, or product mix adjustments that favor parts with more accessible memory sourcing.

Adding more pressure, NVIDIA is also promoting new memory-adjacent platform concepts that further increase the importance of DRAM and memory tiering in AI systems. Jensen Huang recently showcased what NVIDIA calls the Inference Context Memory Storage Platform, positioning it as a new class of AI-focused storage built around inference context and long-term memory workflows. Regardless of how quickly such platforms scale, the strategic direction is clear: AI is consuming not only more compute but also more memory capacity and more sophisticated memory hierarchies, which is why DRAM supply dynamics matter across the entire ecosystem.

The bottom line is that NVIDIA is projecting confidence on the AI supply chain front, while the consumer market remains more exposed to the same memory constraints that defined 2025. For gamers and PC builders, the risk is not that the industry runs out of GPUs, but that the most desirable SKUs, the most cost-efficient configurations, or the most aggressive refresh cadence becomes harder to sustain when memory is the gating factor.

If memory shortages continue through 2026, would you rather see GPU makers prioritize stable availability at realistic prices, or push higher tier models first even if mainstream gamers face longer waits?