Chinese AI Chip Startup Iluvatar CoreX Unveils Roadmap Claiming Vera Rubin Level Parity by 2027

A new claim making the rounds in the China AI hardware ecosystem is turning heads, not because it is subtle, but because it is aggressively ambitious. Chinese AI chip startup Iluvatar CoreX has reportedly shared a roadmap that aims to go head to head with NVIDIA’s current and upcoming data center accelerators, with targets that include competing with Blackwell in 2026 and reaching performance parity with NVIDIA Vera Rubin by 2027.

According to a report from MyDrivers, Iluvatar CoreX is positioning itself as an HPC focused company, differentiating from domestic peers that often try to span both consumer graphics and AI acceleration. The report frames Iluvatar CoreX as betting on a proprietary architecture under its Tianshu Zhixin lineup, with a roadmap that is intended to scale rapidly across multiple generations. The core message is simple and bold: compete with Blackwell this year, then match Rubin next year, an objective that would normally take far more than a single product cadence for most teams, even with a deep ecosystem behind them.

Context matters here. China is under heavy pressure to secure compute capacity as AI demand accelerates, and that pressure has created multiple parallel paths. Domestic chip development is one lane, while other lanes include rental access to overseas infrastructure and, as frequently discussed in industry circles, gray market sourcing. Against this backdrop, a steady stream of vendors have attempted to fill the gap for hyperscalers and large enterprise buyers, including familiar names like Huawei, Moore Threads, and BirenTech. Iluvatar CoreX is entering that same arena, but with messaging that implies it wants to compete at the very top of the stack, not just provide a workable alternative for constrained procurement conditions.

What the public information suggests so far is that Iluvatar CoreX already claims products that map to older NVIDIA class performance tiers. Coverage referenced by TrendForce notes TianGai 100 and TianGai 150 as examples of solutions the company positions around Ampere era equivalence. However, as is often the case with emerging accelerators in this region, details that determine real competitiveness remain thin in public view, including deployment scale, software maturity, kernel level optimization depth, framework support consistency, and real world performance in production workloads rather than controlled benchmarks.

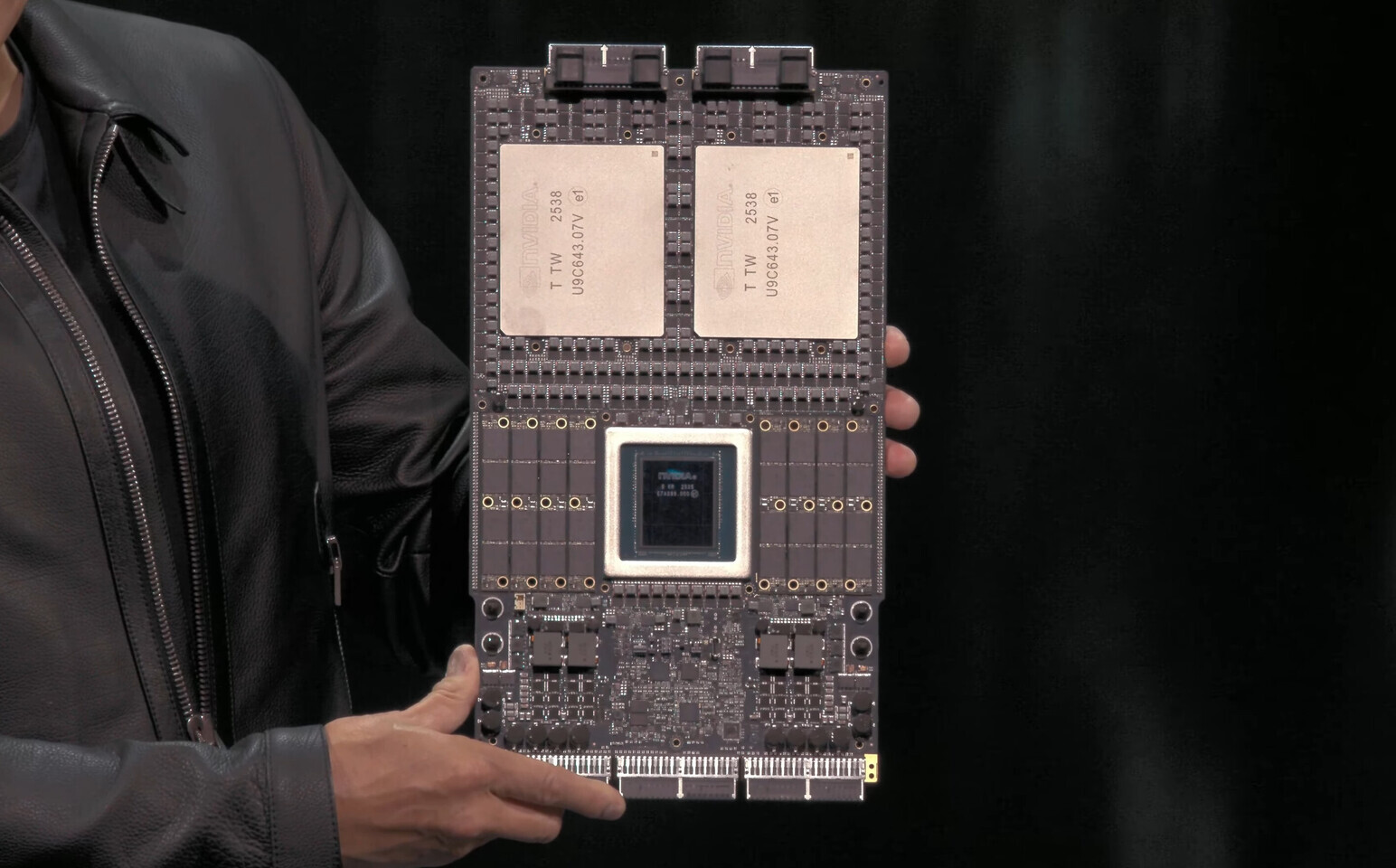

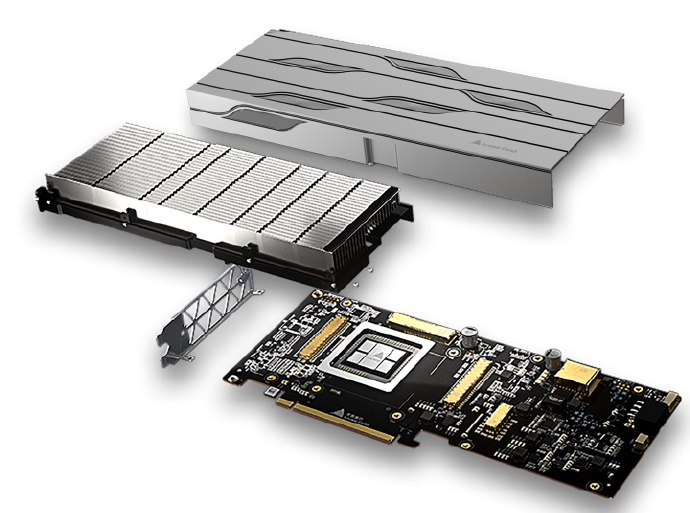

This is also not the first time we have seen domestic claims aiming directly at NVIDIA’s next platform cycle. Huawei has made its own aggressive positioning statements around rack scale competitiveness, citing systems like Atlas 950 and Atlas 960 SuperPoDs that reportedly scale to 8,192 Ascend 950 AI chips and are presented as a counterweight to NVIDIA Vera Rubin NVL144 style configurations. Those comparisons can sound compelling on paper, but they quickly become harder to defend once you factor in power envelopes, thermal design, networking, system reliability at scale, and the software ecosystem required to keep utilization high across large clusters.

The structural bottleneck remains the same one that repeatedly shows up for Chinese AI chip startups and even larger domestic players: the semiconductor ecosystem. Architecture alone does not win the market. Manufacturing capability, packaging capacity, advanced memory integration, toolchain maturity, driver stability, and developer adoption are the difference between an aspirational slide and a platform hyperscalers will actually standardize on. Even if Iluvatar CoreX has a strong architectural direction, the gap between a roadmap pledge and an ecosystem capable of matching NVIDIA’s full stack is still a massive execution challenge.

For now, this roadmap is a signal worth tracking rather than a victory lap. If Iluvatar CoreX can turn these claims into validated silicon, scalable supply, and credible software readiness, it becomes a meaningful storyline in the accelerating AI infrastructure race. If not, it risks being filed alongside a growing list of ambitious parity claims that never translated into broad market displacement.

What matters most to you when judging these next wave AI chip challengers, raw performance targets, software ecosystem maturity, or the ability to ship at scale with stable supply?